The pace of change in AI continues to accelerate. For example, say hello to Google’s children.

Researchers at Google Brain created AutoML, an AI system capable of generating its own AIs, reducing the need for human experts to design them.

Maybe they won’t be alien doppelgangers, exact mirror images of us.

But extraterrestrial life, should it exist, might look “eerily similar to the life we see on Earth,” says Charles Cockell, professor of astrobiology at the University of Edinburgh in Scotland.

Indeed, Cockell’s new book (The Equations of Life: How Physics Shapes Evolution, Basic Books, 352 pages) suggests a “universal biology”: alien adaptations closely resembling terrestrial life, from humanoids to hummingbirds, may have emerged on billions of worlds.

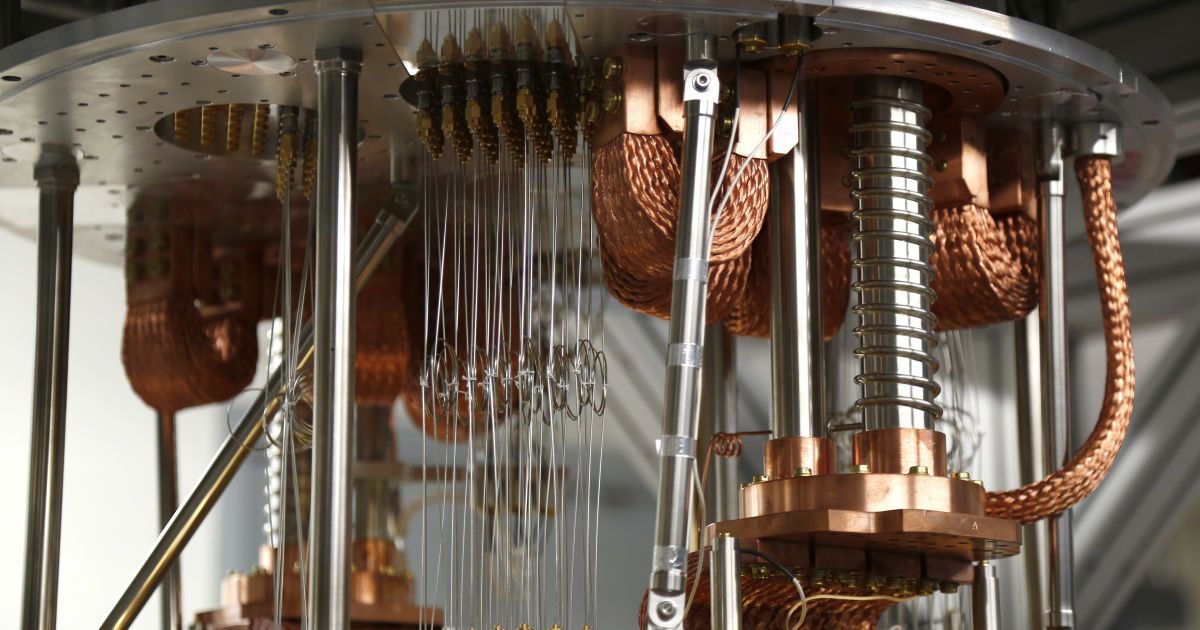

Can the origin of life be explained with quantum mechanics? And if so, are there quantum algorithms that could encode life itself?

We’re a little closer to finding out the answers to those big questions thanks to new research carried out on an IBM quantum computer.

A newly created quantum algorithm, encoding behaviours related to self-replication, mutation, interaction between individuals, and (inevitably) death, has been used to show that quantum computers can indeed mimic some of the patterns of biology in the real world.

It is important to know why a program does what it does. This is no mystery: technology is a tool, and that tool is only as good as the human who created it.

You always have to know why a program makes the decisions it makes. No program or algorithm will be perfect; that is the main issue Lisa Haven raises. You also have to determine the reason for an error: whether it is innocent, intentional, or even criminal.

That is the problem with blindly letting technology rule the day. Anyone with an old-school management background will tell you never to let technology govern your decisions on its own. You always have to know the why, where, and how behind your decisions.

It is important to get this kind of technology right. Everyone wants a shortcut instead of doing the hard work of ensuring that the information being put forward is correct.

Quantum computing isn’t going to revolutionize AI anytime soon, according to a panel of experts in both fields.

Different worlds: Yoshua Bengio, one of the fathers of deep learning, joined quantum computing experts from IBM and MIT for a panel discussion yesterday. Participants included Peter Shor, the man behind the most famous quantum algorithm. Bengio said he was keen to explore new computer designs, and he peppered his co-panelists with questions about what a quantum computer might be capable of.

Quantum leaps: The panel’s quantum experts explained that while quantum computers are scaling up, it will be a while (we’re talking years here) before they can do any useful machine learning, partly because many extra qubits will be needed for error correction. To complicate things further, it isn’t clear exactly what quantum computers will be able to do better than their classical counterparts. But both Aram Harrow of MIT and IBM’s Kristian Temme said that early research on quantum machine learning is under way.

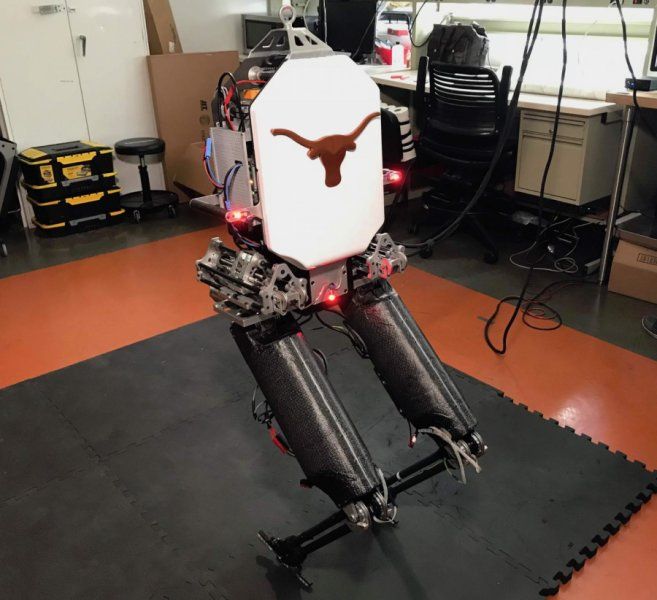

When moving through a crowd to reach some end goal, humans can usually navigate the space safely without thinking too much. They can learn from the behavior of others and note any obstacles to avoid. Robots, on the other hand, struggle with such navigational concepts.

MIT researchers have now devised a way to help robots navigate environments more like humans do. Their novel motion-planning model lets robots determine how to reach a goal by exploring the environment, observing other agents, and exploiting what they’ve learned before in similar situations. A paper describing the model was presented at this week’s IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS).

Popular motion-planning algorithms create a tree of possible decisions that branches out until it finds good paths for navigation. A robot that needs to cross a room to reach a door, for instance, will build a step-by-step search tree of possible movements and then execute the best path to the door, subject to various constraints. One drawback, however, is that these algorithms rarely learn: robots can’t leverage information about how they or other agents acted previously in similar environments.
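To make the search-tree idea concrete, here is a minimal sketch. It assumes a robot moving on a 2D occupancy grid (`#` = obstacle, `.` = free) and uses plain breadth-first search, a much simpler stand-in for the sampling-based planners (such as RRT) common in robotics. It is not the MIT model. The names `plan_path` and `room` are hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]

# Hypothetical illustration: the grid world and BFS are assumptions,
# not the planner described in the MIT paper.
def plan_path(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Grow a search tree outward from `start` until `goal` is reached.

    Returns the cells from start to goal, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    parent: Dict[Cell, Optional[Cell]] = {start: None}  # the tree: child -> parent
    frontier = deque([start])
    while frontier:
        cell = frontier.popleft()
        if cell == goal:
            # Walk back up the tree from the goal to recover the path.
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        r, c = cell
        # Each candidate move is a branch of the tree.
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            nxt = (nr, nc)
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != "#" and nxt not in parent):
                parent[nxt] = cell
                frontier.append(nxt)
    return None

if __name__ == "__main__":
    room = [
        "....",
        ".##.",
        "....",
    ]
    print(plan_path(room, (0, 0), (2, 3)))  # a shortest route around the obstacles
```

Because the tree is rebuilt from scratch on every call, nothing learned in earlier runs carries over, which is exactly the drawback described above.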

By translating a key human dynamic skill, maintaining whole-body balance, into a mathematical equation, the team was able to use the formula to program their robot Mercury, which was built and tested over the course of six years. They calculated that the margin of error at which an average person loses their balance and falls while walking is a simple figure: 2 centimeters.

“Essentially, we have developed a technique to teach autonomous robots how to maintain balance even when they are hit unexpectedly, or a force is applied without warning,” Sentis said. “This is a particularly valuable skill we as humans frequently use when navigating through large crowds.”

Sentis said their technique has been successful in dynamically balancing both bipeds without ankle control and full humanoid robots.

If simulating the brain is proving tricky, why don’t we try decoding it?

“There’s a good reason the first flying machines weren’t mechanical bats: people tried that, and they were terrible.” — Dan Robitzski

In the current AI Spring, many people and corporations are betting big that the capabilities of deep learning algorithms will continue to improve as the algorithms are fed more data. Their faith is backed by the feats such algorithms have achieved: they can see, listen, and do a thousand other things that were previously considered too difficult for AI.

Our guest for the third episode of the AGI Podcast, Pascal Kaufmann, is amongst those who believe such faith in deep learning is misplaced.