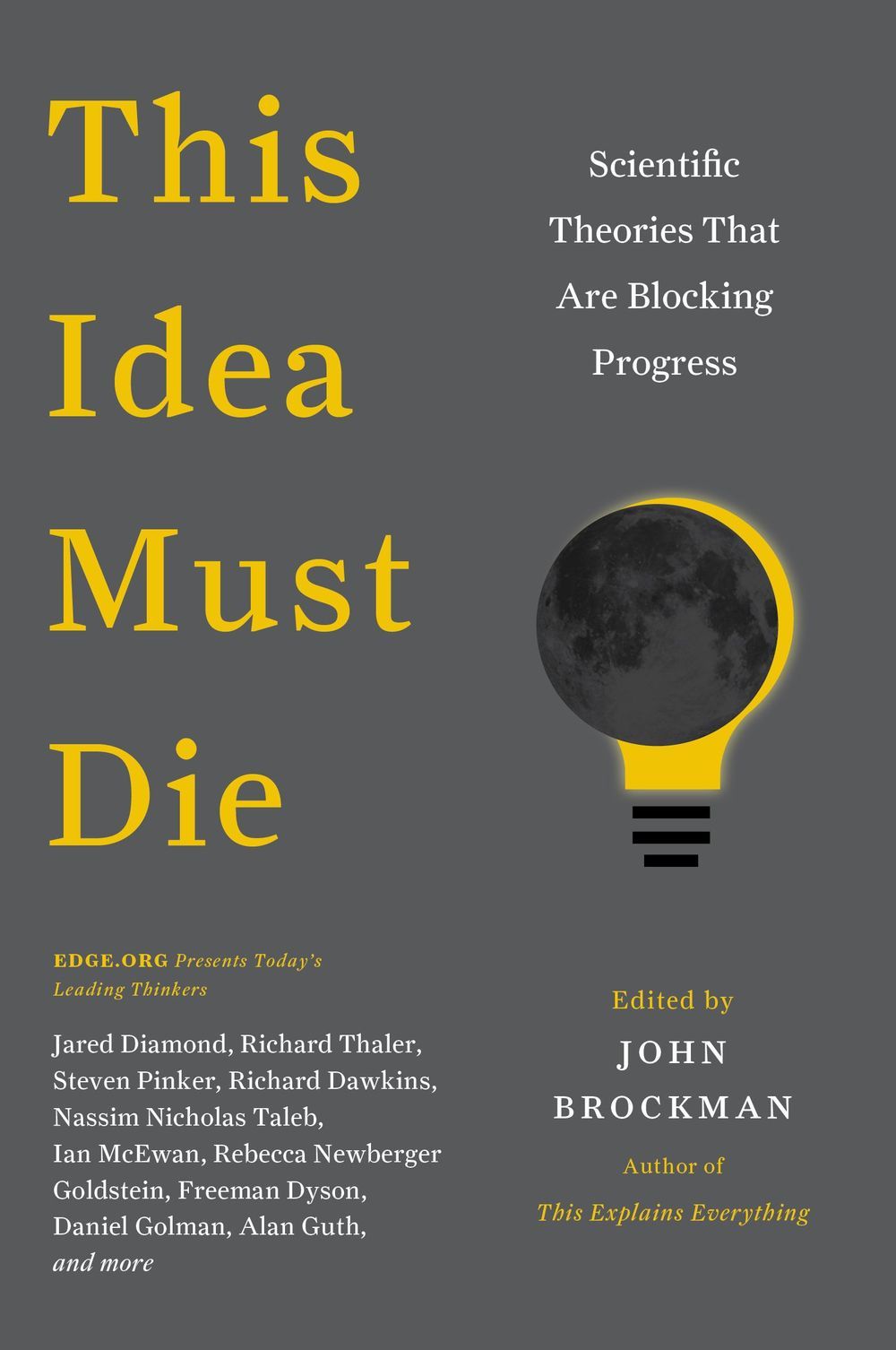

Circa 2018

The experimental mastery of complex quantum systems is required for future technologies like quantum computers and quantum encryption. Scientists from the University of Vienna and the Austrian Academy of Sciences have broken new ground. They set out to use quantum systems more complex than two-dimensionally entangled qubits, which increases the information capacity for the same number of particles. The methods and technologies they developed could in the future enable the teleportation of complex quantum systems. The results of their work, “Experimental Greenberger-Horne-Zeilinger entanglement beyond qubits,” were recently published in the renowned journal Nature Photonics.

Similar to bits in conventional computers, qubits are the smallest unit of information in quantum systems. Big companies like Google and IBM are competing with research institutes around the world to produce an increasing number of entangled qubits and develop a functioning quantum computer. But a research group at the University of Vienna and the Austrian Academy of Sciences is pursuing a new path to increase the information capacity of complex quantum systems.

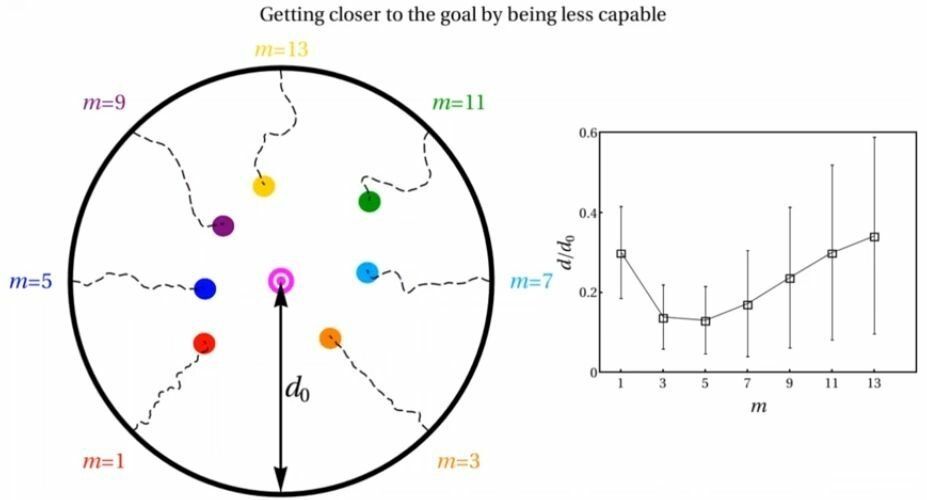

The idea behind it is simple: Instead of just increasing the number of particles involved, the complexity of each system is increased. “The special thing about our experiment is that for the first time, it entangles three photons beyond the conventional two-dimensional nature,” explains Manuel Erhard, first author of the study. For this purpose, the Viennese physicists used quantum systems with more than two possible states—in this particular case, the angular momentum of individual light particles. These individual photons now have a higher information capacity than qubits. However, the entanglement of these light particles turned out to be difficult on a conceptual level. The researchers overcame this challenge with a groundbreaking idea: a computer algorithm that autonomously searches for an experimental implementation.
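To make the capacity argument concrete, here is a minimal counting sketch in Python. It compares three two-level particles (qubits) with three particles that each have three levels. The three-level choice is an assumption made for illustration only, since the text above says just "more than two possible states". The function names are hypothetical. "Capacity" here means the logarithm of the number of distinguishable basis states, which is a rough counting measure and not a description of the experiment itself.

```python
import math


def hilbert_dimension(levels: int, particles: int) -> int:
    """Number of basis states of `particles` systems with `levels` states each."""
    return levels ** particles


def capacity_bits(levels: int, particles: int) -> float:
    """Classical-bit equivalent of the basis-state count: particles * log2(levels)."""
    return particles * math.log2(levels)


if __name__ == "__main__":
    # Assumption for illustration: compare 2 levels (qubits) with 3 levels per photon.
    for levels in (2, 3):
        dim = hilbert_dimension(levels, particles=3)
        bits = capacity_bits(levels, particles=3)
        print(f"{levels} levels x 3 particles: {dim} basis states, {bits:.2f} bits")
```

With the particle number held at three, going from two to three levels per particle raises the number of basis states from 8 to 27, which is the sense in which each photon carries more information than a qubit.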