The problem concerns the mathematical properties of solutions to the Navier–Stokes equations, a system of partial differential equations that describe the motion of a fluid in space. Solutions to the Navier–Stokes equations are used in many practical applications. However, theoretical understanding of the solutions to these equations is incomplete. In particular, solutions of the Navier–Stokes equations often include turbulence, which remains one of the greatest unsolved problems in physics, despite its immense importance in science and engineering.
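For reference, the standard incompressible form of the equations is given below. This is the setting of the open existence-and-smoothness question. The symbols are velocity field u, pressure p, constant density ρ, kinematic viscosity ν and body force per unit mass f:

$$
\frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u}\cdot\nabla)\mathbf{u} = -\frac{1}{\rho}\nabla p + \nu\,\nabla^{2}\mathbf{u} + \mathbf{f}, \qquad \nabla\cdot\mathbf{u} = 0.
$$

The nonlinear advection term (u·∇)u couples motion across all scales. It is the main source of both the analytical difficulty and turbulent behavior.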

Category: information science

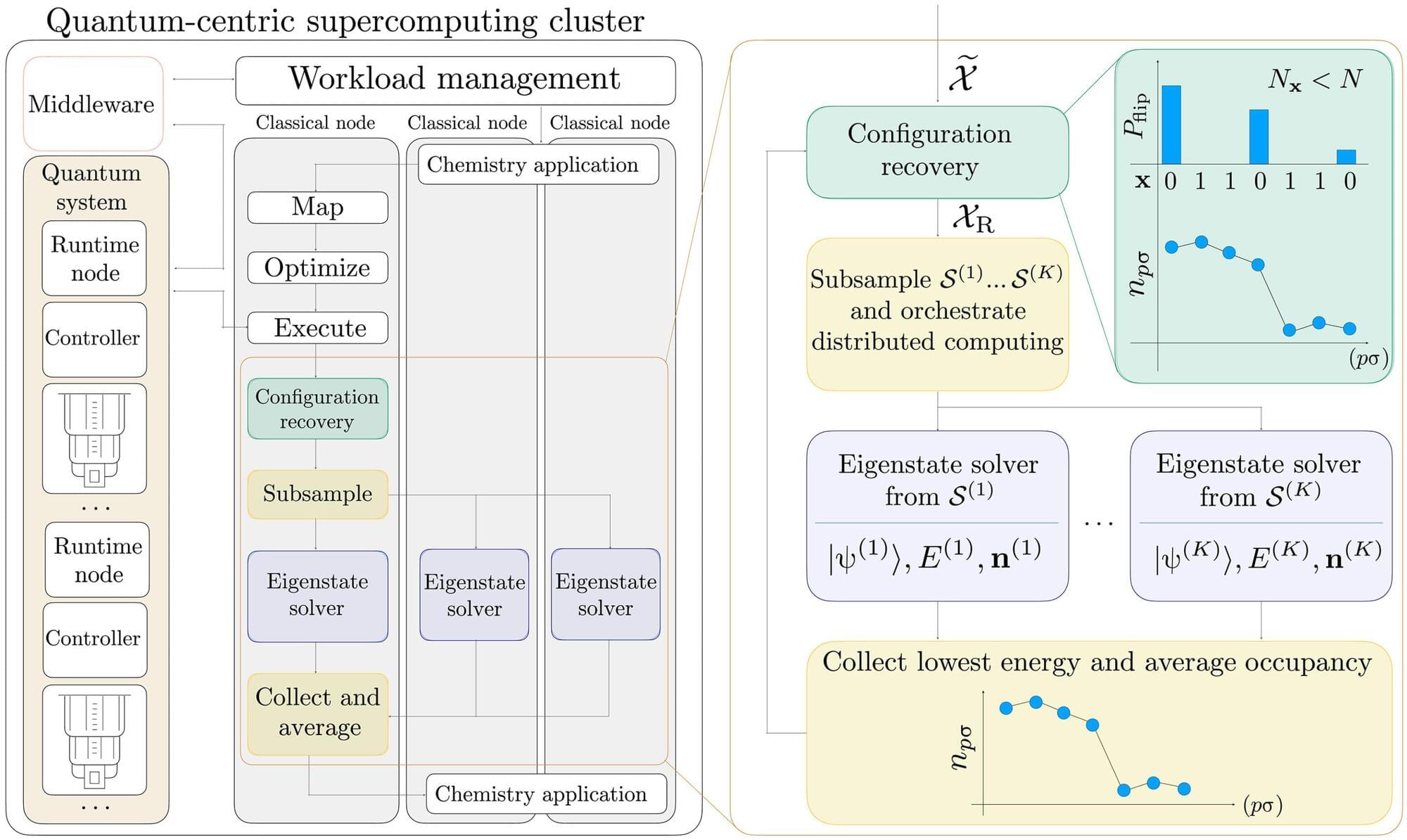

New hybrid quantum–classical computing approach used to study chemical systems

Caltech professor of chemistry Sandeep Sharma and colleagues from IBM and the RIKEN Center for Computational Science in Japan are giving us a glimpse of the future of computing. The team has used quantum computing in combination with classical distributed computing to attack a notably challenging problem in quantum chemistry: determining the electronic energy levels of a relatively complex molecule.

The work demonstrates the promise of such a quantum–classical hybrid approach for advancing not only quantum chemistry, but also fields such as materials science, nanotechnology, and drug discovery, where insight into the electronic fingerprint of materials can reveal how they will behave.

“We have shown that you can take classical algorithms that run on high-performance classical computers and combine them with quantum algorithms that run on quantum computers to get useful chemical results,” says Sharma, a new member of the Caltech faculty whose work focuses on developing algorithms to study quantum chemical systems. “We call this quantum-centric supercomputing.”
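The article does not name the specific algorithm the team used. The sketch below therefore shows only one generic hybrid pattern, assumed here for illustration. In this pattern, a quantum device proposes a subset of important basis configurations, and classical computers diagonalize the Hamiltonian restricted to that subset. A random symmetric matrix stands in for a molecular Hamiltonian. The `sampled` indices are a hypothetical stand-in for quantum measurement results.

```python
import numpy as np


def subspace_ground_energy(hamiltonian: np.ndarray, sampled: list[int]) -> float:
    """Lowest eigenvalue of the Hamiltonian projected onto the sampled basis states.

    In a hybrid workflow the indices would come from quantum-hardware samples;
    here they are supplied directly (hypothetical stand-in).
    """
    idx = np.array(sorted(set(sampled)))
    # Principal submatrix: keep only the rows/columns of the sampled configurations.
    h_sub = hamiltonian[np.ix_(idx, idx)]
    # Classical step: exact diagonalization of the much smaller subspace matrix.
    return float(np.linalg.eigvalsh(h_sub)[0])


def random_hamiltonian(dim: int, seed: int = 0) -> np.ndarray:
    """Random real symmetric matrix used as a toy stand-in for a molecular Hamiltonian."""
    rng = np.random.default_rng(seed)
    a = rng.normal(size=(dim, dim))
    return (a + a.T) / 2


if __name__ == "__main__":
    h = random_hamiltonian(200)
    exact = float(np.linalg.eigvalsh(h)[0])
    approx = subspace_ground_energy(h, list(range(0, 200, 2)))
    # The subspace estimate can never fall below the exact ground energy.
    print(f"exact={exact:.4f} subspace={approx:.4f}")
```

The subspace energy is the lowest eigenvalue of a principal submatrix, so it is an upper bound on the true ground-state energy. It can only improve as the sampled set grows to cover more of the important configurations.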

Reports in Advances of Physical Sciences

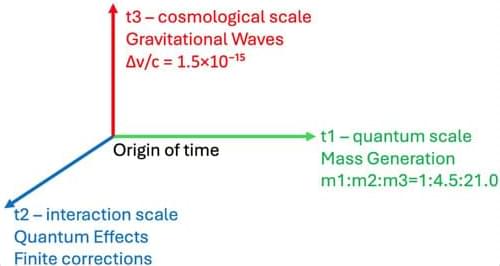

In this paper, the authors propose a three-dimensional time model, arguing that nature itself hints at the need for three temporal dimensions. Why three? The authors point to patterns at three different scales that suggest distinct kinds of “temporal flow”: the quantum world of tiny particles, the realm of everyday physical interactions, and the grand sweep of cosmological evolution. Intriguingly, these time layers correspond to the three generations of fundamental particles in the Standard Model, exemplified by the electron and its heavier cousins, the muon and the tau. The model doesn’t just assume these generations. According to the authors, it explains why there are exactly three and even predicts their mass differences using mathematics derived from a “temporal metric.”

This paper introduces a theoretical framework based on three-dimensional time, where the three temporal dimensions emerge from fundamental symmetry requirements. The necessity for exactly three temporal dimensions arises from observed quantum-classical-cosmological transitions that manifest at three distinct scales: Planck-scale quantum phenomena, interaction-scale processes, and cosmological evolution. These temporal scales directly generate three particle generations through eigenvalue equations of the temporal metric, naturally explaining both the number of generations and their mass hierarchy. The framework introduces a metric structure with three temporal and three spatial dimensions, preserving causality and unitarity while extending standard quantum mechanics and field theory.
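The excerpt does not give the paper's actual metric. Purely as an illustration of what “three temporal and three spatial dimensions” means, here is a flat line element with signature (3,3). The sign convention is one we chose for illustration:

$$
ds^{2} = -c^{2}\left(dt_{1}^{2} + dt_{2}^{2} + dt_{3}^{2}\right) + dx^{2} + dy^{2} + dz^{2}
$$

Ordinary spacetime is the special case with a single time coordinate t₁. This flat form does not capture any curvature. It also does not capture the eigenvalue equations of the temporal metric that, according to the abstract, generate the three particle generations.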

Tesla Robotaxi Vs. Waymo

Tesla is planning to launch a robotaxi service in Austin, Texas. The service is expected to disrupt the market through Tesla's competitive advantages in data collection, cost, and production, shifting the company’s business model toward recurring software revenue.

Questions to inspire discussion.

Tesla’s Robotaxi Launch.

🚗 Q: When is Tesla launching its robotaxi service? A: Tesla’s robotaxi launch is scheduled for June 22nd, marking a transformational shift from hardware sales to recurring software revenue with higher margins.

🌆 Q: How will Tesla’s robotaxi service initially roll out? A: The service will start with a small fleet of 10–20 vehicles, scaling up to multiple cities by year-end and millions of cars by next year’s end, with an invite-only system initially.

Tesla vs. Waymo.

📊 Q: How does Tesla’s data collection compare to Waymo’s? A: Tesla collects 10 million miles of full self-driving data daily, compared to Waymo’s 250,000 miles, giving Tesla a significant data advantage for training AI and encountering corner cases.

🏭 Q: What production advantage does Tesla have over Waymo? A: Tesla can produce 5,000 vehicles per day, while Waymo has 1,500 vehicles with plans to add 200,000 over the next year, giving Tesla a substantial cost and scale advantage.
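The figures quoted in the Q&A above are claims, not independently verified numbers. Taking them at face value, a quick calculation makes the stated gaps concrete:

```python
# Figures as quoted in the Q&A above; not independently verified.
TESLA_FSD_MILES_PER_DAY = 10_000_000
WAYMO_MILES_PER_DAY = 250_000
TESLA_VEHICLES_PER_DAY = 5_000
WAYMO_FLEET_SIZE = 1_500


def data_ratio() -> float:
    """How many times more driving data Tesla reportedly collects per day."""
    return TESLA_FSD_MILES_PER_DAY / WAYMO_MILES_PER_DAY


def days_to_match_fleet() -> float:
    """Days of Tesla production needed to build as many cars as Waymo's current fleet."""
    return WAYMO_FLEET_SIZE / TESLA_VEHICLES_PER_DAY


print(f"data ratio: {data_ratio():.0f}x")
print(f"days to match Waymo's fleet: {days_to_match_fleet():.1f}")
```

On these numbers, Tesla logs about 40 times Waymo's daily mileage. It would also need less than a third of a day of production to match Waymo's current fleet.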

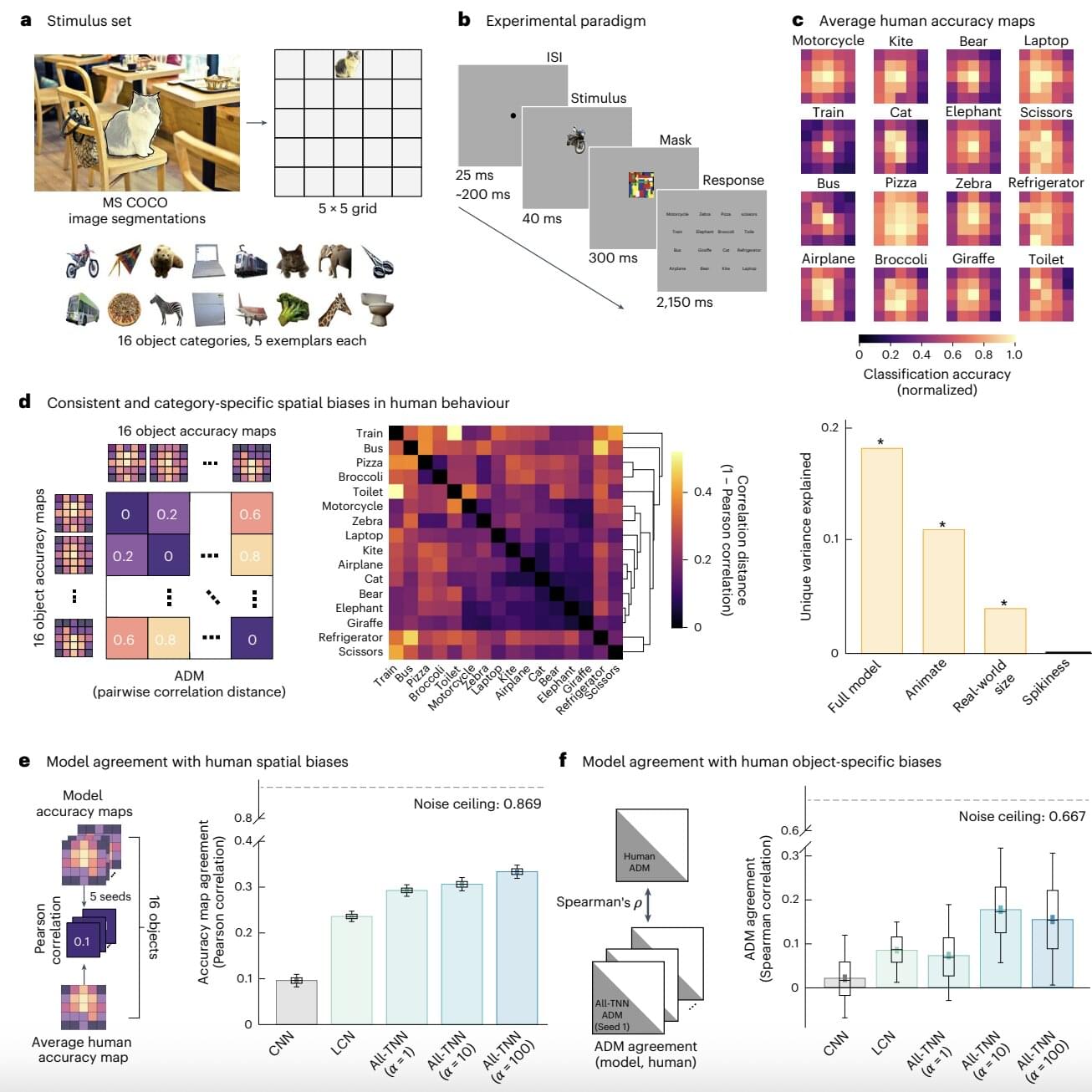

All-topographic neural networks more closely mimic the human visual system

Deep learning models, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), are designed to partly emulate the functioning and structure of biological neural networks. As a result, in addition to tackling various real-world computational problems, they could help neuroscientists and psychologists better understand the underpinnings of specific sensory or cognitive processes.

Researchers at Osnabrück University, Freie Universität Berlin and other institutes recently developed a new class of artificial neural networks (ANNs) that could mimic the human visual system better than CNNs and other existing deep learning algorithms. Their newly proposed, visual system-inspired computational techniques, dubbed all-topographic neural networks (All-TNNs), are introduced in a paper published in Nature Human Behaviour.

“Previously, the most powerful models for understanding how the brain processes visual information were derived off of AI vision models,” Dr. Tim Kietzmann, senior author of the paper, told Tech Xplore.

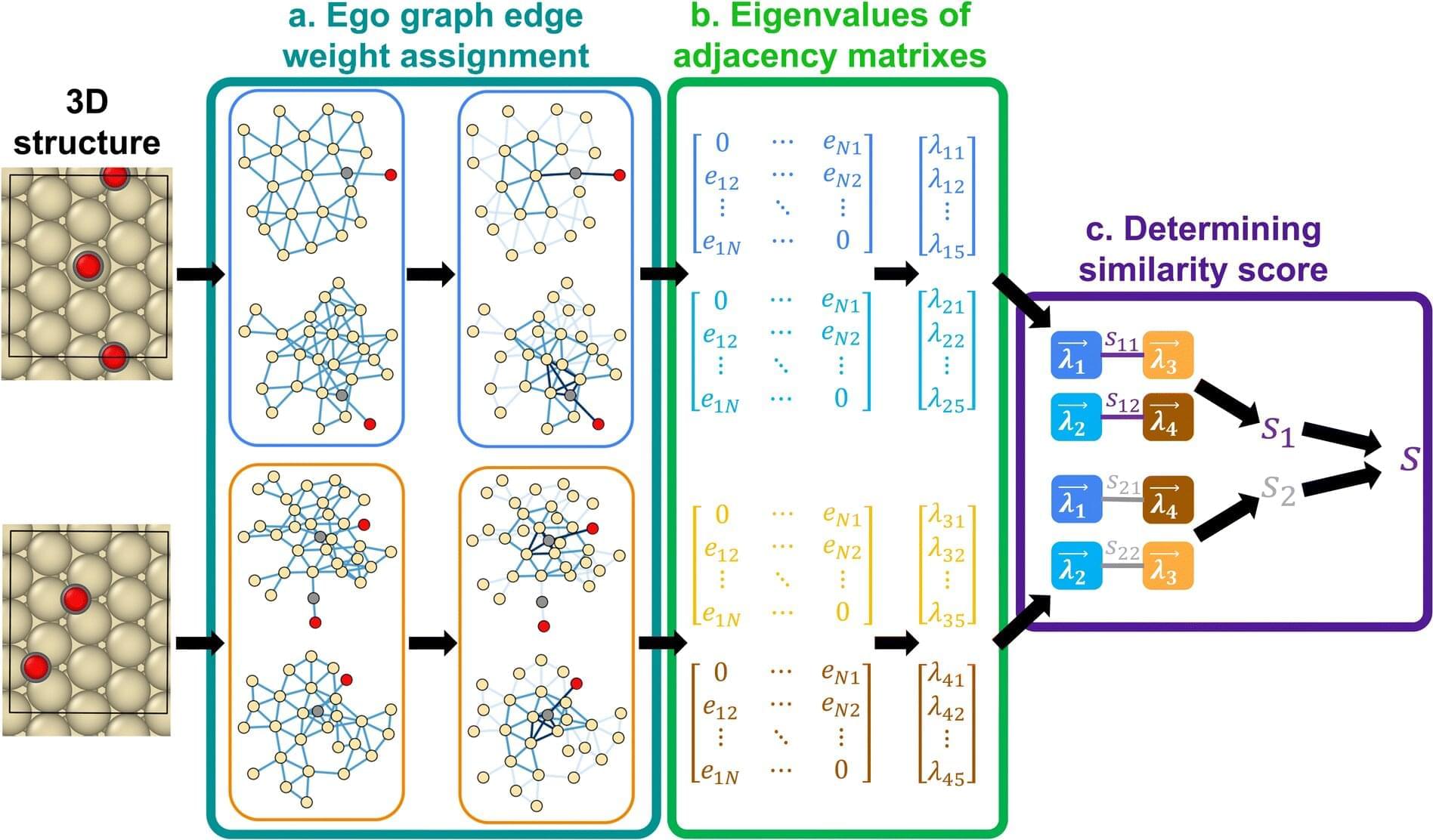

Advanced algorithm to study catalysts on material surfaces could lead to better batteries

A new algorithm opens the door for using artificial intelligence and machine learning to study the interactions that happen on the surface of materials.

Scientists and engineers study the atomic interactions that happen on the surface of materials to develop more energy-efficient batteries, capacitors, and other devices. But accurately simulating these fundamental interactions requires immense computing power to fully capture the geometrical and chemical intricacies involved, and current methods are just scratching the surface.

“Currently it’s prohibitive and there’s no supercomputer in the world that can do an analysis like that,” says Siddharth Deshpande, an assistant professor in the University of Rochester’s Department of Chemical Engineering. “We need clever ways to manage that large data set, use intuition to understand the most important interactions on the surface, and apply data-driven methods to reduce the sample space.”
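Here is a minimal sketch of why the sample space explodes and how symmetry can shrink it. It uses a toy model, which is an assumption and not the Rochester group's algorithm. Identical adsorbates sit on a ring of equivalent surface sites. Configurations related by a translation are physically equivalent, so each needs to be simulated only once. The function names are hypothetical.

```python
from itertools import combinations
from math import comb


def raw_count(n_sites: int, n_ads: int) -> int:
    """Number of ways to place n_ads identical adsorbates on n_sites sites."""
    return comb(n_sites, n_ads)


def canonical(occupied: tuple[int, ...], n_sites: int) -> tuple[int, ...]:
    """Representative of a configuration under cyclic translations of the ring:
    the lexicographically smallest sorted tuple among all shifts."""
    return min(
        tuple(sorted((site + shift) % n_sites for site in occupied))
        for shift in range(n_sites)
    )


def unique_count(n_sites: int, n_ads: int) -> int:
    """Number of symmetry-distinct configurations (brute force; small n only)."""
    return len({canonical(c, n_sites) for c in combinations(range(n_sites), n_ads)})


if __name__ == "__main__":
    for n, k in [(6, 2), (12, 4), (16, 5)]:
        print(n, k, raw_count(n, k), unique_count(n, k))
    # Even a modest 100-site surface with 10 adsorbates has ~1.7e13 raw configurations.
    print(f"{raw_count(100, 10):.2e}")
```

The translation-only reduction roughly divides the count by the number of sites. Real surfaces add rotations and reflections. The data-driven methods Deshpande describes go further by focusing on the most important interactions.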

50 Years Later, a Quantum Mystery Has Finally Been Solved

The quantum physics community is buzzing with excitement after researchers at Rice University finally observed a phenomenon that had eluded scientists for over 70 years. The phenomenon, known as the superradiant phase transition (SRPT), was reported recently in Science Advances. Its observation represents a significant milestone in quantum mechanics and opens extraordinary possibilities for future technological applications.

In 1954, physicist Robert H. Dicke proposed an intriguing theory: under specific conditions, large groups of excited atoms could emit light in perfect synchronization rather than independently. This collective behavior, termed superradiance, was predicted to be capable of producing an entirely new phase of matter through a genuine phase transition.
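Dicke's own counting shows what emitting in synchronization buys. Consider N two-level atoms, each with single-atom decay rate Γ, in a symmetric Dicke state |J, M⟩ with J = N/2. That state decays at the rate below. In the half-excited state (M = 0), the rate grows as N². By contrast, N/2 excited atoms radiating independently give only NΓ/2:

$$
\Gamma_{J,M} = \Gamma\,(J+M)(J-M+1), \qquad \Gamma_{N/2,\,0} = \Gamma\,\frac{N}{2}\left(\frac{N}{2}+1\right) \approx \frac{N^{2}}{4}\,\Gamma \quad (N \gg 1).
$$

This emission-rate enhancement is superradiance itself. The superradiant phase transition is a further, equilibrium effect: the ground state of the coupled light–matter system changes character.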

For over seven decades, this theoretical concept remained largely confined to equations and speculation. The primary obstacle was the infamous “no-go theorem,” which seemingly prohibited such transitions in conventional light-based systems. This theoretical barrier frustrated generations of quantum physicists attempting to observe this elusive phenomenon.
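The transition can be sketched with the textbook Dicke Hamiltonian H = ω a†a + ω₀ J_z + (2λ/√N)(a + a†) J_x in its mean-field (large-N) limit. Once the photon amplitude is set to its optimal value, the ground-state energy per atom reduces to E(θ)/N = −(ω₀/2) cos θ − (λ²/ω) sin² θ. Minimizing over the spin angle θ gives two regimes:

- Below the critical coupling λ_c = √(ω ω₀)/2, the system is in a normal phase (sin θ = 0).
- Above λ_c, it is in a superradiant phase (sin θ > 0).

This sketch covers the idealized model only. It omits the diamagnetic A² term. In ordinary atom–light systems, that term is what the no-go theorem uses to rule the transition out.

```python
import numpy as np


def mean_field_energy(theta: np.ndarray, lam: float, omega: float, omega0: float) -> np.ndarray:
    """Mean-field ground-state energy per atom of the Dicke model (A^2 term omitted),
    after eliminating the optimal photon-field amplitude."""
    return -0.5 * omega0 * np.cos(theta) - (lam**2 / omega) * np.sin(theta) ** 2


def order_parameter(lam: float, omega: float = 1.0, omega0: float = 1.0) -> float:
    """|sin(theta)| at the energy minimum, found by a dense grid search over [0, pi]."""
    theta = np.linspace(0.0, np.pi, 200_001)
    best = theta[np.argmin(mean_field_energy(theta, lam, omega, omega0))]
    return float(abs(np.sin(best)))


def analytic_order_parameter(lam: float, omega: float = 1.0, omega0: float = 1.0) -> float:
    """Closed form: 0 below lambda_c, sqrt(1 - (lambda_c/lambda)^4) above it."""
    lam_c = np.sqrt(omega * omega0) / 2
    return 0.0 if lam <= lam_c else float(np.sqrt(1 - (lam_c / lam) ** 4))


if __name__ == "__main__":
    # With omega = omega0 = 1 the critical coupling is lambda_c = 0.5.
    for lam in [0.3, 0.45, 0.6, 1.0]:
        print(lam, round(order_parameter(lam), 4), round(analytic_order_parameter(lam), 4))
```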

Can space and time emerge from simple rules? Wolfram thinks so

Stephen Wolfram joins Brian Greene to explore the computational basis of space, time, general relativity, quantum mechanics, and reality itself.

This program is part of the Big Ideas series, supported by the John Templeton Foundation.

Participant: Stephen Wolfram.

Moderator: Brian Greene.

0:00:00 — Introduction.

01:23 — Unifying Fundamental Science with Advanced Mathematical Software.

13:21 — Is It Possible to Prove a System’s Computational Reducibility?

24:30 — Uncovering Einstein’s Equations Through Software Models.

37:00 — Is connecting space and time a mistake?

49:15 — Generating Quantum Mechanics Through a Mathematical Network.

01:06:40 — Can Graph Theory Create a Black Hole?

01:14:47 — The Computational Limits of Being an Observer.

01:25:54 — The Elusive Nature of Particles in Quantum Field Theory.

01:37:45 — Is Mass a Discoverable Concept Within Graph Space?

01:48:50 — The Mystery of the Number Three: Why Do We Have Three Spatial Dimensions?

01:59:15 — Unraveling the Mystery of Hawking Radiation.

02:10:15 — Could You Ever Imagine a Different Career Path?

02:16:45 — Credits.
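The “simple rules” theme is often illustrated with Wolfram's elementary cellular automaton Rule 30. In Rule 30, each cell's next value is left XOR (center OR right). It is Wolfram's standard example of a simple rule whose behavior, as far as is known, can only be obtained by running it step by step. It is offered here only as a familiar example. The models discussed in the video are based on hypergraph rewriting, which this sketch does not implement.

```python
def rule30(steps: int) -> list[list[int]]:
    """Evolve elementary cellular automaton Rule 30 from a single live cell.

    The grid is 2*steps+1 cells wide, so the pattern (which grows by one cell
    per side per step) never reaches the zero-padded boundary.
    """
    width = 2 * steps + 1
    row = [0] * width
    row[steps] = 1
    history = [row]
    for _ in range(steps):
        padded = [0] + row + [0]
        # Rule 30: new cell = left XOR (center OR right).
        row = [padded[i] ^ (padded[i + 1] | padded[i + 2]) for i in range(width)]
        history.append(row)
    return history


if __name__ == "__main__":
    for r in rule30(15):
        print("".join("#" if c else "." for c in r))
```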


How AI & Supercomputing Are Reshaping Aerospace & Finance w/ Allan Grosvenor (MSBAI)

Excellent podcast interview with Allan Grosvenor!

How Allan built MSBAI to make supercomputing more accessible.

How AI-driven simulation is speeding up aircraft & spacecraft design.

Why AI is now making an impact in finance & algorithmic trading.

The next evolution of AI-powered decision-making & autonomous systems.

What if AI could power everything from rocket simulations to Wall Street trading? Allan Grosvenor, aerospace engineer and founder of MSBAI, has spent years developing AI-driven supercomputing solutions for space, aviation, defense, and even finance. In this episode, Brent Muller dives deep with Allan on how AI is revolutionizing engineering, the role of supercomputers in aerospace, and why automation is the key to unlocking faster innovation.

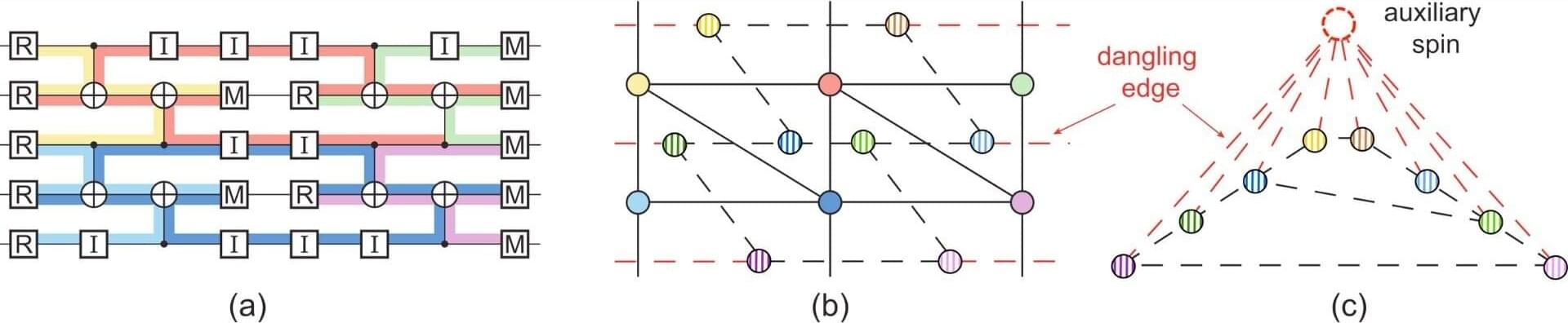

From spin glasses to quantum codes: Researchers develop optimal error correction algorithm

Scientists have developed an exact approach to a key quantum error correction problem once believed to be unsolvable, and have shown that what appeared to be hardware-related errors may in fact be due to suboptimal decoding.

The new algorithm, called PLANAR, achieved a 25% reduction in logical error rates when applied to Google Quantum AI’s experimental data. This discovery revealed that a quarter of what the tech giant attributed to an “error floor” was actually caused by their decoding method, rather than genuine hardware limitations.
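A toy, hypothetical example (this is not the PLANAR algorithm) can illustrate the gap between an optimal and a suboptimal decoder. Errors that differ only by a stabilizer have the same effect. An optimal maximum-likelihood decoder therefore sums the probabilities of every error in each equivalence class. For surface codes, that sum maps onto the partition function of a spin-glass model. A minimum-weight decoder, by contrast, simply trusts the single lightest error. Each class below is described only by the weights of its member errors on n qubits with independent flip probability p.

```python
def class_probability(weights: list[int], n: int, p: float) -> float:
    """Total probability of an error class: sum of p^w (1-p)^(n-w) over its members."""
    return sum(p**w * (1 - p) ** (n - w) for w in weights)


def ml_decode(classes: dict[str, list[int]], n: int, p: float) -> str:
    """Maximum-likelihood choice: the class with the largest total probability."""
    return max(classes, key=lambda c: class_probability(classes[c], n, p))


def min_weight_decode(classes: dict[str, list[int]]) -> str:
    """Minimum-weight choice: the class containing the single lightest error."""
    return min(classes, key=lambda c: min(classes[c]))


if __name__ == "__main__":
    # Hypothetical classes: A has one weight-2 error, B has four weight-3 errors.
    toy = {"A": [2], "B": [3, 3, 3, 3]}
    for p in (0.1, 0.3):
        print(p, ml_decode(toy, n=5, p=p), min_weight_decode(toy))
```

At low p both decoders agree. At higher p, many heavier errors can together outweigh one light error. The minimum-weight decoder then picks the less likely class and adds logical errors that have nothing to do with the hardware. This is the kind of decoder-induced excess the article describes.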

Quantum computers are extraordinarily sensitive to errors, making quantum error correction essential for practical applications.