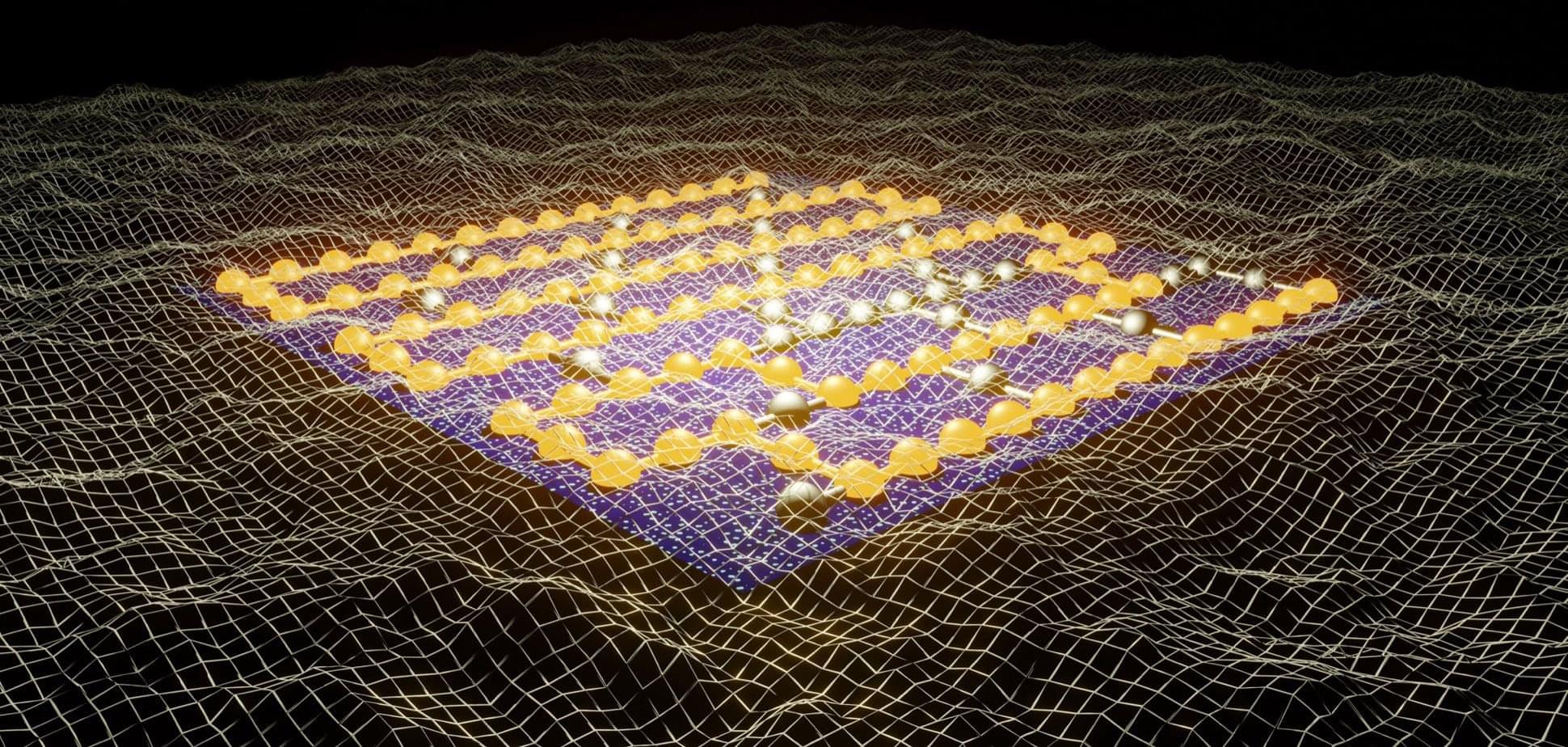

While atmospheric turbulence is a familiar cause of rough flights, the chaotic motion of turbulent flows remains an unsolved problem in physics. To gain insight into these flows, a team of researchers used explainable AI to pinpoint the most important regions in a turbulent flow, according to a Nature Communications study led by the University of Michigan and the Universitat Politècnica de València.

A clearer understanding of turbulence could improve forecasting, helping pilots navigate around turbulent areas to avoid passenger injuries or structural damage. It could also help engineers manipulate turbulence: dialing it up to enhance industrial mixing processes such as water treatment, or dialing it down to improve vehicle fuel efficiency.

“For more than a century, turbulence research has struggled with equations too complex to solve, experiments too difficult to perform, and computers too weak to simulate reality. Artificial Intelligence has now given us a new tool to confront this challenge, leading to a breakthrough with profound practical implications,” said Sergio Hoyas, a professor of aerospace engineering at the Universitat Politècnica de València and co-author of the study.