Could a year and a half of rumors be leading to the biggest video game reveal of all time?

Category: entertainment – Page 4

THE FOREST KING | Red Iron Road | Full Episode

Set in a suffocating, over-commercialized future, a young boy’s desire for success and status leads him into a terrifying and deadly new reality through the lens of a mysterious VR game.

His desperate father must now race against both time and technology to save his dying son.

But the boy is the only one who can save himself — before becoming another faceless pawn of the Forest King.

Adapted from the poem “The Forest Tsar” (1818) by Vasiliy Zhukovsky.

🎬 THE FOREST KING

The second installment of the animated horror anthology RED IRON ROAD.

Each episode is a standalone short film (10–20 minutes) inspired by European literature, produced with unique creative partners in distinctive visual styles.

🏆 Festival Highlights.

• World Premiere – Nightmares Film Festival (Ohio, USA, 2022)

• Nominations – FilmQuest, Blood in the Snow Film Festival, Animaze.

🌐 Official Sites:

https://www.lakesideanimation.com/for…

🎥 Credits

• Directed & Written By: Lubomir Arsov

• Produced By: Lakeside Animation

• Animation By: Art Light & Riki Group (Petersburg Animation Studio)

• Musical Score By: Lars Korb

• Starring: Carlo Rota, Tom Rooney, Jaiden Cannatelli

📖 Full Cast & Crew: https://www.imdb.com/title/tt23845934…

📺 Where to Watch Season 1 of Red Iron Road:

• Amazon Prime Video (subscription required): https://www.primevideo.com/detail/0PB…

• TubiTV (free streaming): https://tubitv.com/movies/100014990/r…

• Plex (free streaming): https://tubitv.com/movies/100014990/r…

• Hoopla Digital (free with library card): https://www.hoopladigital.com/televis…

• Kanopy (free via library or university): https://www.kanopy.com/en/product/140…

• Apple TV (subscription to Prime Video required): https://tv.apple.com

🔔 Subscribe for new animated horror shorts and exclusive content!

Follow Red Iron Road & Lakeside Animation:

• Facebook: /redironroadseries

• Instagram: /redironroadseries

• TikTok: /lakesideanimation

• LinkedIn: /lakeside-animation

🔖 Hashtags: #RedIronRoad #AdultAnimation #Games #Anthology #Animation #AnimatedAnthology #HorrorAnimation #SlavicMythology #AnimatedShortFilm #LakesideAnimation #EuropeanHorror #AnimationStudio #FolkHorror #AnimatedHorror #CreepyTales #ClassicLiterature #AnimationForAdults

© 2022 Ghost Train Productions Inc.

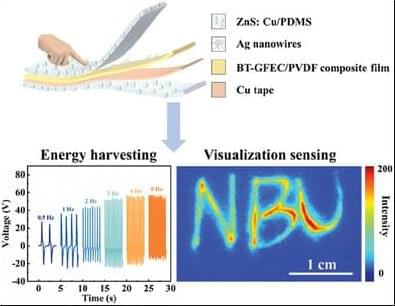

Flexible Piezoelectric Energy Harvesters with Mechanoluminescence for Mechanical Energy Harvesting and Stress Visualization Sensing

Flexible piezoelectric energy harvesters (FPEHs) have wide applications in mechanical energy harvesting, portable device driving, and piezoelectric sensing. However, their poor output performance and the intrinsic limitation that piezoelectric sensors can detect only dynamic pressure restrict further applications. BaTiO3 (BT) and PVDF are deposited on glass fiber electronic cloth (GFEC) by impregnation and spin-coating, respectively, to form BT-GFEC/PVDF piezoelectric composite films. A mixed solution of mechanoluminescence (ML) particles (ZnS:Cu) and PDMS is used as the encapsulation layer to construct a high-performance ML-FPEH with self-powered electrical and optical dual-mode response characteristics. Owing to the interconnected structure of the piezoelectric films, the prepared ML-FPEH exhibits highly effective energy harvesting performance (≈58 V, ≈43.56 µW cm−2). It can also effectively harvest mechanical energy from human activities. More importantly, the ML-FPEH can sense the stress distribution of handwriting via ML to achieve stress visualization, making up for the shortcomings of piezoelectric sensors. This work provides a new strategy for endowing FPEHs with dual-mode sensing and energy harvesting.

Best space strategy games, ranked

Conquer the cosmos and lead ships, fleets, and even entire civilizations to victory in the best space strategy games.

Thin solar-powered films purify water by killing bacteria even in low sunlight

Around 4.4 billion people worldwide still lack reliable access to safe drinking water. Newly designed, thin floating films that harness sunlight to eliminate over 99.99% of bacteria could help change that, turning contaminated water into a safe resource and offering a promising solution to this urgent global challenge.

In a recent study, researchers from Sun Yat-sen University, China, presented a self-floating photocatalytic film composed of a specially designed conjugated polymer photocatalyst (Cz-AQ) that generates oxygen-centered organic radicals (OCORs) in water.

These OCORs are efficiently formed due to the strong electron-donating and accepting groups incorporated into the polymer design, resulting in lifetimes orders of magnitude longer than those of conventional reactive oxygen species. With more time to act, the radicals enable the film to break down organic pollutants and suppress bacterial regrowth for at least five days.
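For readers used to seeing disinfection reported as a "log reduction", the 99.99% figure quoted above can be converted with a few lines of arithmetic. The Python sketch below is illustrative only and is not taken from the study: the function names and the starting count of one million bacteria are hypothetical, chosen to show what the percentage means in practice.

```python
import math


def log_reduction(percent_eliminated: float) -> float:
    """Convert a percent-eliminated figure into a log10 reduction value."""
    if not 0 <= percent_eliminated < 100:
        raise ValueError("percent_eliminated must be in [0, 100)")
    surviving_fraction = 1 - percent_eliminated / 100
    return -math.log10(surviving_fraction)


def survivors(initial_count: float, percent_eliminated: float) -> float:
    """Estimate how many organisms remain after treatment."""
    return initial_count * (1 - percent_eliminated / 100)


# 99.99% is the figure quoted in the article; it works out to
# roughly a 4-log reduction.
reduction = log_reduction(99.99)

# Hypothetical starting population (an assumption, not a value from the study).
remaining = survivors(1_000_000, 99.99)

print(f"log reduction: {reduction:.2f}")
print(f"survivors out of 1,000,000: {remaining:.0f}")
```

Under these assumptions, eliminating 99.99% of a million bacteria would leave about a hundred, and "over 99.99%" would leave fewer.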

Wolfram Was Right About Everything

Full episode with William Hahn: https://youtu.be/3fkg0uTA3qU

As a TOE listener, you can get a special 20% discount on The Economist and all it has to offer! Visit https://www.economist.com/toe.

Join My New Substack (Personal Writings): https://curtjaimungal.substack.com.

Listen on Spotify: https://tinyurl.com/SpotifyTOE

Become a YouTube Member (Early Access Videos):

https://www.youtube.com/channel/UCdWIQh9DGG6uhJk8eyIFl1w/join.

Support TOE on Patreon: https://patreon.com/curtjaimungal.