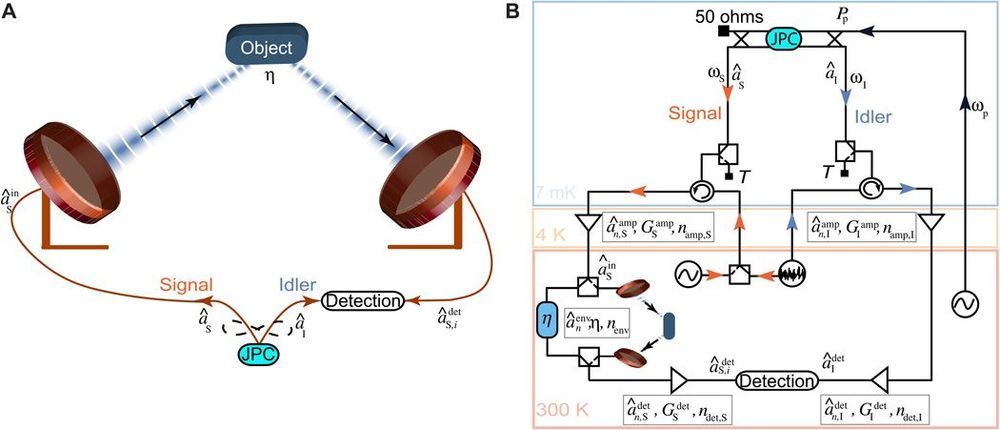

Quantum illumination uses entangled signal-idler photon pairs to boost the detection efficiency of low-reflectivity objects in environments with bright thermal noise. Its advantage is particularly evident at low signal powers, a promising feature for applications such as noninvasive biomedical scanning or low-power short-range radar. Here, we experimentally investigate the concept of quantum illumination at microwave frequencies. We generate entangled fields to illuminate a room-temperature object at a distance of 1 m in a free-space detection setup. We implement a digital phase-conjugate receiver based on linear quadrature measurements that outperforms a symmetric classical noise radar in the same conditions, despite the entanglement-breaking signal path. Starting from experimental data, we also simulate the case of perfect idler photon number detection, which results in a quantum advantage compared with the relative classical benchmark. Our results highlight the opportunities and challenges on the way toward a first room-temperature application of microwave quantum circuits.

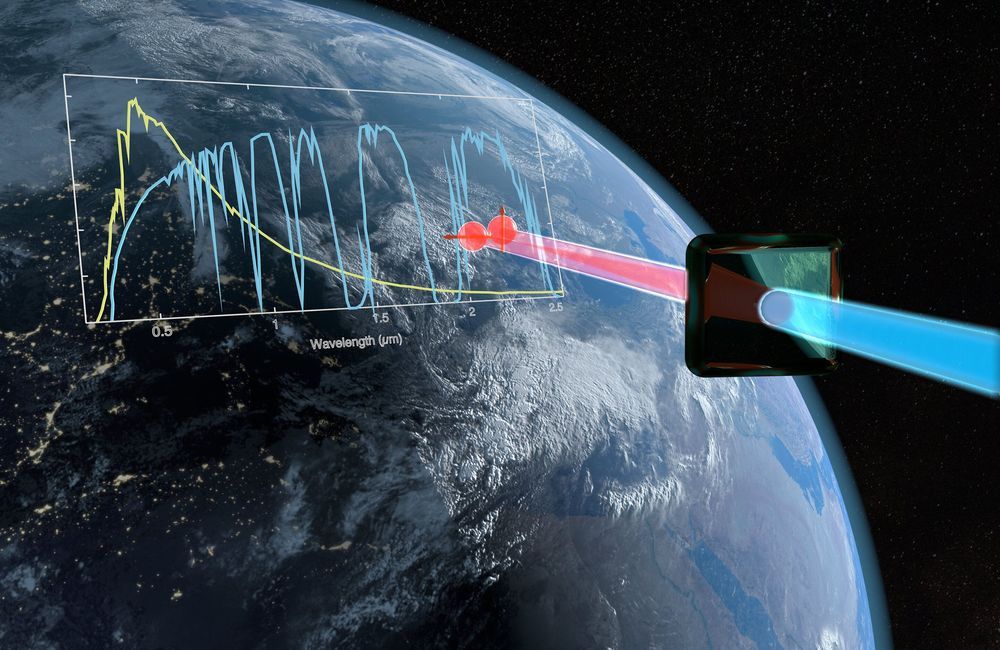

Quantum sensing is well developed for photonic applications (1) in line with other advanced areas of quantum information (2–5). Quantum optics has been, so far, the most natural and convenient setting for implementing the majority of protocols in quantum communication, cryptography, and metrology (6). The situation is different at longer wavelengths, such as terahertz or microwaves, for which the current variety of quantum technologies is more limited and confined to cryogenic environments. With the exception of superconducting quantum processing (7), no microwave quanta are typically used for applications such as sensing and communication. For these tasks, high-energy and low-loss optical and telecom frequency signals represent the first choice and form the communication backbone in the future vision of a hybrid quantum internet (8–10).

Despite this general picture, there are applications of quantum sensing that are naturally embedded in the microwave regime. This is exactly the case with quantum illumination (QI) (11–17) for its remarkable robustness to background noise, which, at room temperature, amounts to ∼10³ thermal quanta per mode at a few gigahertz. In QI, the aim is to detect a low-reflectivity object in the presence of very bright thermal noise. This is accomplished by probing the target with less than one entangled photon per mode, in a stealthy noninvasive fashion, which is impossible to reproduce with classical means. In the Gaussian QI protocol (12), the light is prepared in a two-mode squeezed vacuum state with the signal mode sent to probe the target, while the idler mode is kept at the receiver.
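The room-temperature noise level quoted above can be checked with the Bose-Einstein occupation formula, n = 1/(exp(hf/k_B T) − 1). The following is a minimal sketch, not part of the experiment: the function name `thermal_quanta`, the temperature of 300 K, and the sample frequencies of 1, 5, and 10 GHz are illustrative assumptions chosen to represent "a few gigahertz".

```python
import math

H = 6.62607015e-34   # Planck constant in J*s (exact SI value)
K_B = 1.380649e-23   # Boltzmann constant in J/K (exact SI value)


def thermal_quanta(freq_hz: float, temp_k: float) -> float:
    """Mean number of thermal photons per mode (Bose-Einstein statistics).

    Hypothetical helper for illustration; freq_hz is the mode frequency
    in hertz and temp_k is the bath temperature in kelvin.
    """
    x = H * freq_hz / (K_B * temp_k)
    # expm1 keeps precision when x is small, as it is for microwaves at 300 K
    return 1.0 / math.expm1(x)


if __name__ == "__main__":
    # Assumed values: room temperature taken as 300 K
    for f_ghz in (1.0, 5.0, 10.0):
        n = thermal_quanta(f_ghz * 1e9, 300.0)
        print(f"{f_ghz:4.1f} GHz at 300 K: {n:8.1f} quanta per mode")
```

For these assumed values, the occupation comes out in the range of several hundred to several thousand quanta per mode, consistent with the ∼10³ order of magnitude stated in the text. Evaluating the same function at millikelvin temperatures gives an occupation far below one, which is why microwave quantum circuits are normally confined to cryogenic environments.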