GLOBAL GROUP ransomware, launched in June 2025, targets industries across multiple regions with AI-powered negotiation and rebranded BlackLock tactics

An international law enforcement action dismantled a Romanian ransomware gang known as ‘Diskstation,’ which encrypted the systems of several companies in the Lombardy region, paralyzing their businesses.

The law enforcement operation codenamed ‘Operation Elicius’ was coordinated by Europol and also involved police forces in France and Romania.

Diskstation is a ransomware operation that targets Synology Network-Attached Storage (NAS) devices, which are commonly used by companies for centralized file storage and sharing, data backup and recovery, and general content hosting.

A new variant of the Konfety Android malware emerged with a malformed ZIP structure along with other obfuscation methods that allow it to evade analysis and detection.

Konfety poses as a legitimate app, mimicking innocuous products available on Google Play, but features none of the promised functionality.

The malware's capabilities include redirecting users to malicious sites, pushing unwanted app installs, and displaying fake browser notifications.
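The report above does not say exactly how the ZIP structure is malformed, so the following Python sketch is only an illustration of how an analyst might triage an APK (which is a ZIP archive) for header anomalies. It assumes two generic red flags: an entry marked as encrypted, and an entry declaring a compression method other than stored or deflate. The function name `find_zip_anomalies` and the set of expected methods are hypothetical choices for this example, not details taken from the Konfety analysis.

```python
import io
import zipfile

# Assumption: a well-formed APK normally uses only "stored" (0) or "deflate" (8).
EXPECTED_METHODS = {zipfile.ZIP_STORED, zipfile.ZIP_DEFLATED}
ENCRYPTED_FLAG = 0x1  # bit 0 of the ZIP general purpose bit flag


def find_zip_anomalies(data: bytes) -> list[tuple[str, str]]:
    """Return (entry name, reason) pairs for entries with suspicious headers.

    Only the central directory is inspected; no entry is decompressed.
    """
    findings = []
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        for info in archive.infolist():
            if info.flag_bits & ENCRYPTED_FLAG:
                findings.append((info.filename, "encrypted flag set"))
            if info.compress_type not in EXPECTED_METHODS:
                findings.append(
                    (info.filename,
                     f"unexpected compression method {info.compress_type}")
                )
    return findings


if __name__ == "__main__":
    # Build a small, clean archive in memory and check it.
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as zf:
        zf.writestr("AndroidManifest.xml", b"<manifest/>")
    print(find_zip_anomalies(buffer.getvalue()))
```

A check like this only flags inconsistencies in archive metadata. It says nothing about whether the app itself is malicious, and a real triage pipeline would combine it with other signals.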

Threat actors behind the Interlock ransomware group have unleashed a new PHP variant of the group's bespoke remote access trojan (RAT) as part of a widespread campaign using a variant of ClickFix called FileFix.

“Since May 2025, activity related to the Interlock RAT has been observed in connection with the LandUpdate808 (aka KongTuke) web-inject threat clusters,” The DFIR Report said in a technical analysis published today in collaboration with Proofpoint.

“The campaign begins with compromised websites injected with a single-line script hidden in the page’s HTML, often unbeknownst to site owners or visitors.”

Google Gemini for Workspace can be exploited to generate email summaries that appear legitimate but include malicious instructions or warnings that direct users to phishing sites without using attachments or direct links.

Such an attack leverages indirect prompt injections that are hidden inside an email and obeyed by Gemini when generating the message summary.

Despite similar prompt attacks being reported since 2024 and safeguards being implemented to block misleading responses, the technique remains successful.
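The report says the injected instructions are hidden inside the email but does not describe the hiding mechanism here. As a hedged illustration, the Python sketch below assumes the text is concealed with inline CSS (zero font size, white text, or `display:none`) and extracts any text inside such elements so it can be reviewed before a summarizer sees it. The names `HiddenTextFinder` and `find_hidden_text`, and the list of style patterns, are hypothetical and far from exhaustive.

```python
import re
from html.parser import HTMLParser

# Assumption: these inline-style patterns indicate text a human reader won't see.
HIDDEN_STYLE = re.compile(
    r"font-size\s*:\s*0(?![.\d])"
    r"|display\s*:\s*none"
    r"|visibility\s*:\s*hidden"
    r"|(?<![-\w])color\s*:\s*(?:#fff(?:fff)?\b|white)",
    re.IGNORECASE,
)

# Elements that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "track", "wbr"}


class HiddenTextFinder(HTMLParser):
    """Collect text that appears inside elements styled to be invisible."""

    def __init__(self):
        super().__init__()
        self.hidden_depth = 0  # greater than 0 while inside a hidden element
        self.hidden_text = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        style = dict(attrs).get("style") or ""
        if self.hidden_depth or HIDDEN_STYLE.search(style):
            self.hidden_depth += 1

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.hidden_depth:
            self.hidden_depth -= 1

    def handle_data(self, data):
        if self.hidden_depth and data.strip():
            self.hidden_text.append(data.strip())


def find_hidden_text(html: str) -> list[str]:
    finder = HiddenTextFinder()
    finder.feed(html)
    finder.close()
    return finder.hidden_text
```

The sketch assumes reasonably well-formed HTML with matching closing tags, and it ignores stylesheets, CSS classes, and other concealment tricks, so it is a starting point for inspection rather than a defense.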

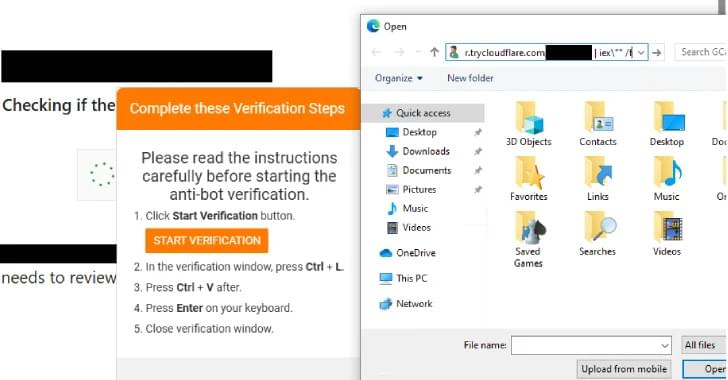

Hackers have adopted a new technique called ‘FileFix’ in Interlock ransomware attacks to drop a remote access trojan (RAT) on targeted systems.

Interlock ransomware operations have increased over the past months as the threat actor started using the KongTuke web injector (aka ‘LandUpdate808’) to deliver payloads through compromised websites.

Researchers at The DFIR Report and Proofpoint have observed this shift in modus operandi since May. At that time, visitors to compromised sites were prompted to pass a fake CAPTCHA verification and then paste content that had been automatically saved to the clipboard into a Run dialog, a tactic consistent with ClickFix attacks.

Dozens of Gigabyte motherboard models run on UEFI firmware vulnerable to security issues that allow planting bootkit malware that is invisible to the operating system and can survive reinstalls.

The vulnerabilities could allow attackers with local or remote admin permissions to execute arbitrary code in System Management Mode (SMM), an environment isolated from the operating system (OS) and with more privileges on the machine.

Mechanisms running code below the OS have low-level hardware access and initiate at boot time. Because of this, malware in these environments can bypass traditional security defenses on the system.