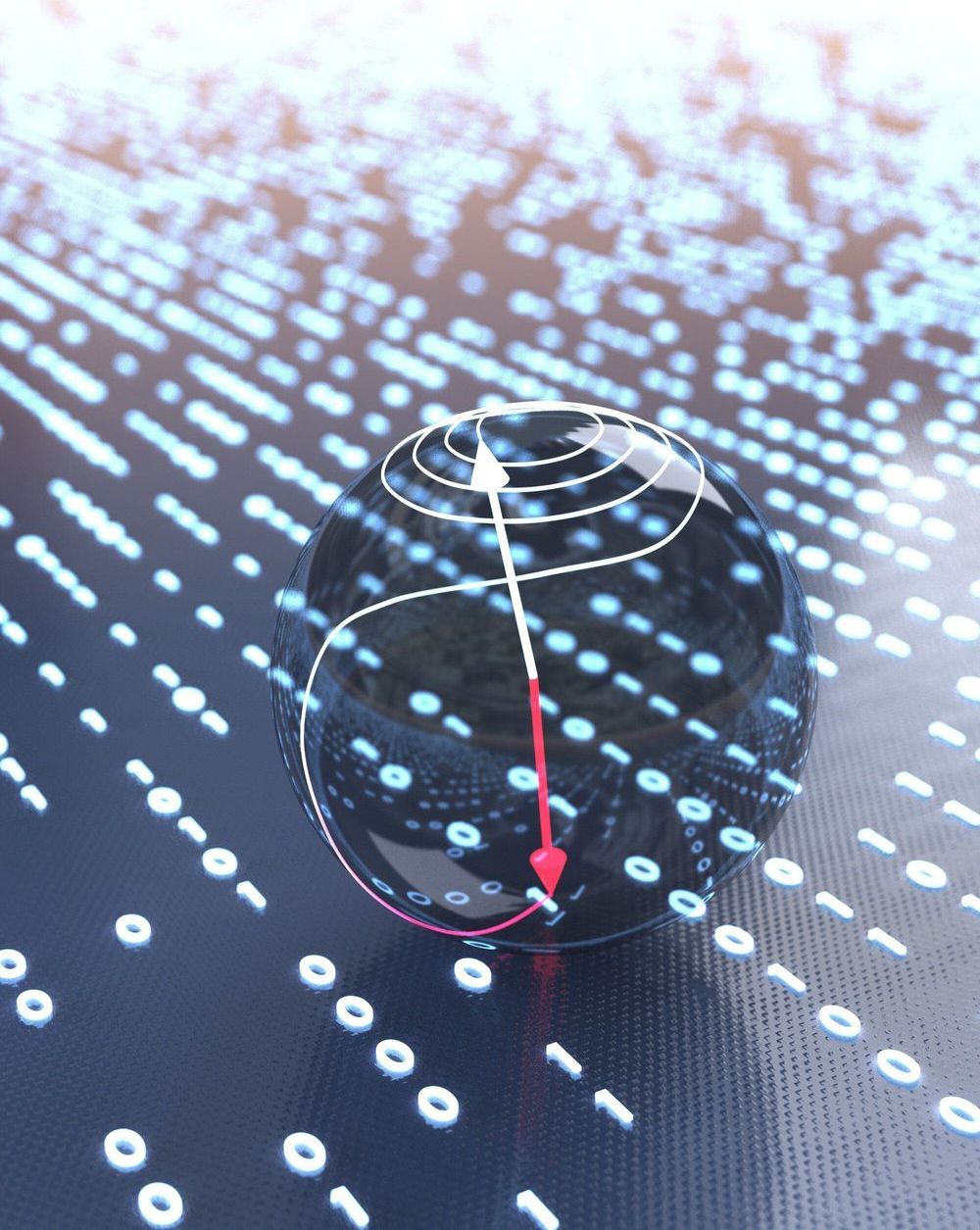

Using a quantum computer, physicists successfully reversed time for an artificial atom. You can even try it at home.

A radically new view, now articulated by a number of digital philosophers, is that consciousness, quantum computational and non-local in nature, is resolutely computational and yet has some “non-computable” properties. Consider this: the English language has 26 letters and about 1 million words, so how many books could possibly be written in English? If you were to build a hypothetical computer containing all the mass and energy of our Universe and ask it this question, even that ultimate computer wouldn’t be able to compute the exact number of all possible combinations of words into meaningful storylines in billions of years! Another example of the non-computability of combinatorics: if you were to be born and live your own life again and again in our Quantum Multiverse, you could live a googolplex (10^(10^100)) lives, but they would all be somewhat different, some drastically different from the life you’re living right now, others only slightly; never quite the same, and timeline-indeterminate.
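To give a sense of scale for the book-counting example, here is a minimal Python sketch. It assumes a vocabulary of exactly 1,000,000 words (the rough figure above) and counts every possible word sequence of a given length. That is only a crude upper bound: it ignores grammar and meaning, and it illustrates combinatorial explosion rather than proving non-computability. The 100,000-word book length and the helper names are hypothetical, chosen for illustration.

```python
import math

# Assumption: a vocabulary of exactly one million words, the rough figure cited above.
VOCABULARY = 1_000_000
GOOGOL = 10 ** 100


def digits_in_sequence_count(vocab: int, length: int) -> int:
    """Decimal digits of vocab**length, the number of all word sequences of that length.

    Uses logarithms so the huge integer never has to be materialized or printed.
    """
    return math.floor(length * math.log10(vocab)) + 1


def words_needed_to_exceed(vocab: int, threshold: int) -> int:
    """Smallest sequence length whose count of possible sequences exceeds threshold."""
    length, count = 0, 1
    while count <= threshold:
        length += 1
        count *= vocab  # exact integer arithmetic, no rounding
    return length


if __name__ == "__main__":
    # A hypothetical 100,000-word book: the count of raw word sequences has 600,001 digits.
    print(digits_in_sequence_count(VOCABULARY, 100_000))
    # Sequences of just 17 words already outnumber a googol (10**100).
    print(words_needed_to_exceed(VOCABULARY, GOOGOL))
```

The exact-integer loop is fine for the googol comparison because the numbers stay small; for book-length sequences the logarithm avoids building a 600,001-digit integer, which Python 3.11+ would also refuse to convert to a string under its default digit limit.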

Another kind of non-computability is akin to fuzzy logic but based on pattern recognition. Deeper understanding refers to a situation in which a conscious agent perceives numerous patterns in a complex environment and analyzes that complexity from a multitude of perspectives. This is beautifully encapsulated by Isaiah Berlin’s quote: “To understand is to perceive patterns.” The ability to recognize patterns in chaos is not straightforwardly algorithmic but rather meta-algorithmic and yet, I’d argue, deeply computational. The types of non-computability I just described may somehow relate to the non-computable element of quantum consciousness that Penrose refers to in his work.

Scientists have created a superfast data-processing method that uses light pulses instead of electricity.

The invention uses magnets to record computer data in a way that consumes virtually zero energy, solving the dilemma of how to achieve faster data processing without the accompanying high energy costs.

Today’s data centre servers account for between 2 and 5% of global electricity consumption, and they produce heat that in turn requires more power to cool the servers.

Elon Musk’s Neuralink startup raises $39 MILLION as it seeks to develop tech that will connect the human brain with computers…

An Elon Musk-backed startup looking to connect human brains to computers has raised most of its $51 million funding target. According to a report, Neuralink has raised $39 million, about three-quarters of the target.

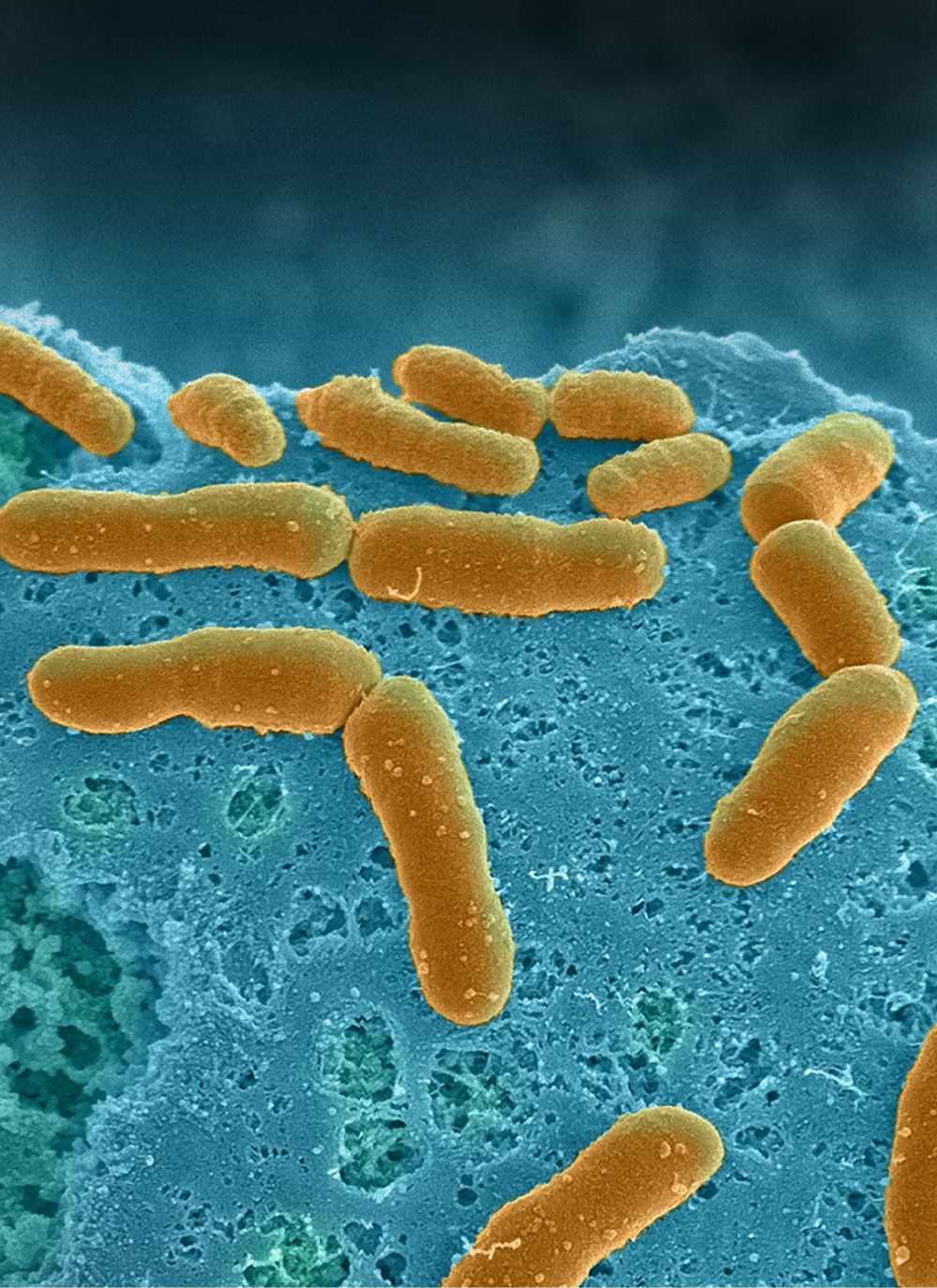

The human microbiome, the huge collection of microbes that live inside and on our bodies, profoundly affects human health and disease. The gut microbes in particular, which make up the body’s densest microbial community, not only break down nutrients and release molecules important for our survival but are also key players in the development of many diseases, including infections, inflammatory bowel diseases, cancer, metabolic diseases, autoimmune diseases, and neuropsychiatric disorders.

Most of what we know about human–microbiome interactions comes from correlational studies that relate disease state to the bacterial DNA in stool samples, using genomic or metagenomic analysis. This is because studying direct interactions between the microbiome and intestinal tissue outside the human body is a formidable challenge, in large part because even commensal bacteria tend to overgrow and kill human cells within a day when grown in culture dishes. Many of the commensal microbes in the intestine are also anaerobic, so they need very low oxygen levels to grow, conditions that can injure human cells.

A research team at Harvard’s Wyss Institute for Biologically Inspired Engineering, led by the Institute’s Founding Director Donald Ingber, has developed a solution to this problem using ‘organ-on-a-chip’ (Organ Chip) microfluidic culture technology. The team can now culture a stable, complex human microbiome in direct contact with a vascularized human intestinal epithelium for at least 5 days in a human Intestine Chip. The chip establishes an oxygen gradient that supplies high oxygen levels to the endothelium and epithelium while keeping the intestinal lumen, where the commensal bacteria live, hypoxic. Their “anaerobic Intestine Chip” stably maintained, for days, a microbial diversity similar to that of human feces, along with a protective physiological barrier formed by the human intestinal tissue. The study is published in Nature Biomedical Engineering.

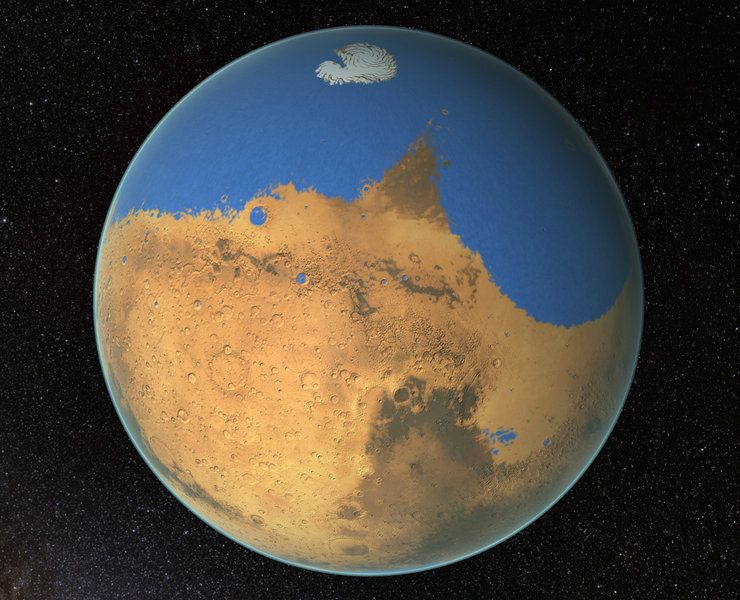

Approximately every two Earth years, when it is summer in the southern hemisphere of Mars, a window opens: only in this season can water vapor efficiently rise from the lower into the upper Martian atmosphere. There, winds carry the scarce gas to the north pole. While part of the water vapor breaks down and escapes into space, the rest sinks back down near the poles. Researchers from the Moscow Institute of Physics and Technology and the Max Planck Institute for Solar System Research (MPS) in Germany describe this unusual Martian water cycle in a recent issue of Geophysical Research Letters. Their computer simulations show how water vapor overcomes the barrier of cold air in the middle atmosphere of Mars and reaches higher atmospheric layers. This could explain why Mars, unlike Earth, has lost most of its water.
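The “approximately every two Earth years” cadence follows from the length of the Martian year: southern summer recurs once per orbit of Mars. A minimal Python sketch of the conversion, assuming an orbital period of about 686.98 Earth days and a 365.25-day Earth year; the helper names are illustrative only.

```python
# Assumed constants: Mars' orbital period in Earth days and the Julian Earth year.
MARS_YEAR_DAYS = 686.98
EARTH_YEAR_DAYS = 365.25


def mars_year_in_earth_years() -> float:
    """Length of one Martian year (one full cycle of seasons) in Earth years."""
    return MARS_YEAR_DAYS / EARTH_YEAR_DAYS


def southern_summer_starts(first: float, count: int) -> list[float]:
    """Earth-year offsets of successive southern summers, given the first one's offset."""
    step = mars_year_in_earth_years()
    return [round(first + i * step, 2) for i in range(count)]


if __name__ == "__main__":
    print(round(mars_year_in_earth_years(), 2))  # 1.88
    print(southern_summer_starts(0.0, 4))
```

A Martian year of roughly 1.88 Earth years is what the text rounds to “approximately every two Earth years”.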

Billions of years ago, Mars was a water-rich planet with rivers and even an ocean. Since then, our neighboring planet has changed dramatically. Today, only small amounts of frozen water exist in the ground, and water vapor occurs in the atmosphere only in traces. All in all, the planet may have lost at least 80 percent of its original water. In the upper atmosphere of Mars, ultraviolet radiation from the Sun splits water molecules into hydrogen (H) and hydroxyl radicals (OH), and the hydrogen escapes irretrievably into space. Measurements by space probes and space telescopes show that water is still being lost this way today. But how is this possible? Like Earth’s tropopause, the middle layer of the Martian atmosphere should actually stop the rising gas: this region is usually so cold that water vapor would turn to ice. So how does Martian water vapor reach the upper air layers?

In their simulations, the Russian and German researchers find a previously unknown mechanism that works like a kind of pump. Their model comprehensively describes the flows in the entire gas envelope surrounding Mars, from the surface up to an altitude of 160 kilometers. The calculations show that the normally ice-cold middle atmosphere becomes permeable to water vapor twice a day, but only at a certain location and at a certain time of year.

This amazing microchip can heal any part of your body with a single touch.
