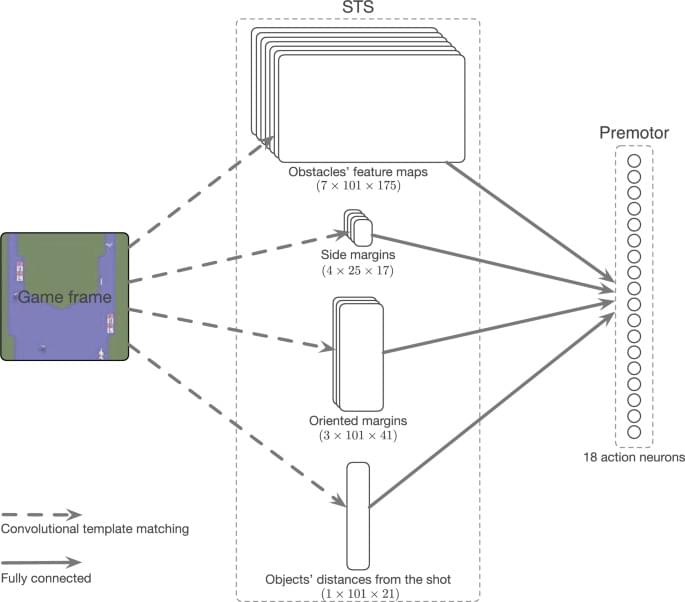

“We’d witness advances like mind-uploading,” B.T. said, and described the process by which the knowledge, analytic skills, intelligence, and personality of a person could be uploaded to a computer chip. “Once uploaded, that chip could be fused with a quantum computer that couples biological with artificial intelligence. If you did this, you’d create a human mind that has a level of computational, predictive, analytic, and psychic skill incomprehensibly higher than any existing human mind. You’d have the mind of God. That online intelligence could then create real effects in the physical world. God’s mind is one thing, but what makes God God is that He cometh to earth —”

When B.T. said earth, he made a sweeping gesture, like a faux preacher, and in his excitement, he knocked over Lily’s glass of wine. A waiter promptly appeared with a handful of napkins, sopping up the mess. B.T. waited for the waiter to leave.

“Don’t give me that look.”