Researchers have been trying to build artificial synapses for years in the hope of approaching the unrivaled computational performance of the human brain. A new approach has now produced artificial synapses that are 1,000 times smaller and 10,000 times faster than their biological counterparts.

Despite the runaway success of deep learning over the past decade, this brain-inspired approach to AI still runs on hardware that bears little resemblance to real brains. That mismatch is a big part of the reason why a human brain weighing just three pounds can pick up new tasks in seconds using about as much power as a light bulb, while training the largest neural networks takes weeks, megawatt-hours of electricity, and racks of specialized processors.

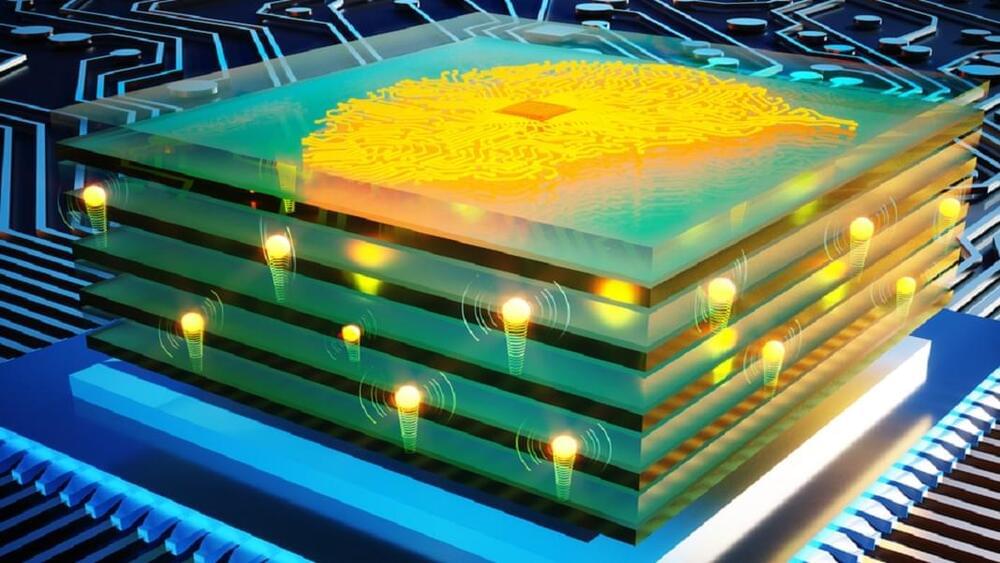

That’s prompting growing interest in efforts to redesign the underlying hardware AI runs on. The idea is that by building computer chips whose components act more like natural neurons and synapses, we might be able to approach the extreme space and energy efficiency of the human brain. Proponents hope these so-called “neuromorphic” processors could be much better suited to running AI than today’s computer chips.