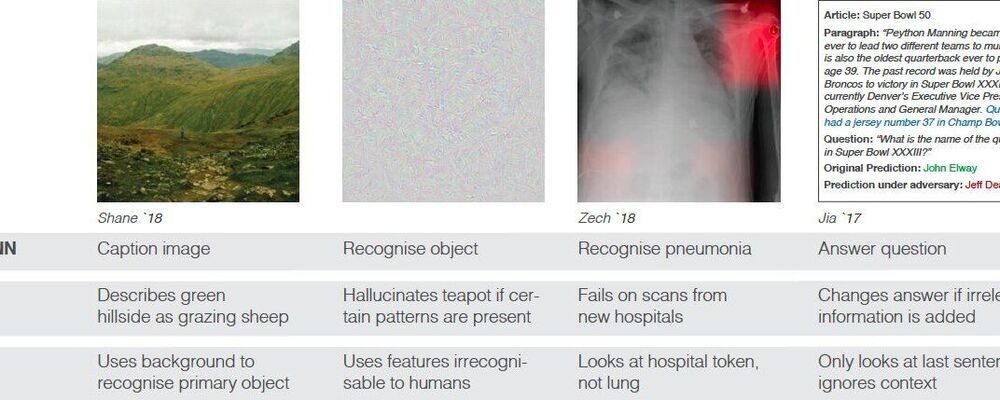

Over the past few years, artificial intelligence (AI) tools, particularly deep neural networks, have achieved remarkable results on a range of tasks. However, studies have also found that these techniques have several limitations. In a recent paper published in Nature Machine Intelligence, researchers at the University of Tübingen and the University of Toronto explored and discussed a problem known as ‘shortcut learning’ that appears to underpin many of the shortcomings of deep neural networks identified so far.

“I decided to start working on this project during a science-related travel in the U.S., together with Claudio Michaelis, a dear colleague and friend of mine,” Robert Geirhos, one of the researchers who carried out the study, told TechXplore. “We first attended a deep learning conference, then visited an animal research laboratory, and finally, a human vision conference. Somewhat surprisingly, we noticed the very same pattern in very different settings: ‘shortcut learning,’ or ‘cheating,’ appeared to be a common characteristic across both artificial and biological intelligence.”

Geirhos and Michaelis believed that shortcut learning, the phenomenon they observed, could explain the discrepancy between the excellent performance and iconic failures of many deep neural networks. To investigate this idea further, they teamed up with other colleagues, including Jörn-Henrik Jacobsen, Richard Zemel, Wieland Brendel, Matthias Bethge and Felix Wichmann.