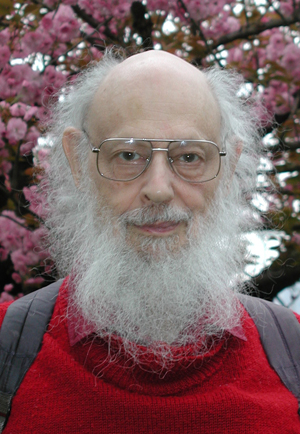

Professor Ray J. Solomonoff

Ray J. Solomonoff, M.S., is the founder of the branch of Artificial Intelligence based on machine learning, prediction, and probability. He circulated the first report on machine learning in 1956.

Ray intended to deliver an invited lecture at the upcoming AGI 2010, the Conference on Artificial General Intelligence (March 5–8, 2010) in Lugano. The AGI conference series would not even exist without his essential theoretical contributions. With great sadness, AGI 2010 will be held “In Memoriam Ray Solomonoff.” He will live on in the many minds shaped by his revolutionary ideas.

Ray invented Algorithmic Probability, with Kolmogorov Complexity as a side product, in 1960. He first described his results at a conference at Caltech in 1960, and in the report A Preliminary Report on a General Theory of Inductive Inference. He clarified these ideas more fully in his 1964 publications: A Formal Theory of Inductive Inference Part I and Part II.

As a young man, Ray decided to major in physics because it was the most general of the sciences, and through it, he could learn to apply mathematics to problems of all kinds. He earned his M.S. in Physics from the University of Chicago in 1950.

Although Ray is best known for Algorithmic Probability and his General Theory of Inductive Inference, he made other important early discoveries, many directed toward his goal of developing a machine that could solve hard problems using probabilistic methods. He wrote three papers, two with Anatol Rapoport, in 1950–52, that are regarded as the earliest statistical analyses of networks. They are Structure of Random Nets, Connectivity of Random Nets, and An Exact Method for the Computation of the Connectivity of Random Nets.

He was one of the 10 attendees at the 1956 Dartmouth Summer Research Conference on Artificial Intelligence, the seminal event for artificial intelligence as a field. He wrote and circulated a report among the attendees: An Inductive Inference Machine. It viewed machine learning as probabilistic, with an emphasis on the importance of training sequences and on the use of parts of previous solutions to problems in constructing trial solutions for new problems. He published a version of his findings in 1957. These were the first papers to be written on machine learning.

In the late 1950s, he invented probabilistic languages and their associated grammars, described in reports such as A Progress Report on Machines to Learn to Translate Languages and Retrieve Information. A probabilistic language assigns a probability value to every possible string. Generalizing the concept of probabilistic grammars led him to his breakthrough discovery of Algorithmic Probability in 1960.
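
To make the idea of a probabilistic language concrete, here is a minimal Python sketch built on a hypothetical toy grammar (an illustration, not one taken from Solomonoff's report): `S -> a S` with probability 1/2 and `S -> b` with probability 1/2. The function `string_probability` (an illustrative name) returns the probability that the grammar derives a given string. Strings outside the language get probability 0, and the strings it does generate, $a^n b$, receive probabilities that sum to 1.

```python
from fractions import Fraction

# Hypothetical toy grammar (an illustration, not taken from Solomonoff's report):
#   S -> "a" S   with probability 1/2
#   S -> "b"     with probability 1/2
# Each right-hand side is a terminal, optionally followed by one nonterminal.
RULES = {
    "S": [(("a", "S"), Fraction(1, 2)), (("b",), Fraction(1, 2))],
}

def string_probability(s: str, symbol: str = "S") -> Fraction:
    """Probability that a derivation starting from `symbol` yields exactly `s`."""
    total = Fraction(0)
    for rhs, p in RULES[symbol]:
        terminal, *rest = rhs
        if not s.startswith(terminal):
            continue  # this rule cannot produce s
        remainder = s[len(terminal):]
        if rest:
            # The rule continues with a nonterminal, which must derive the remainder.
            total += p * string_probability(remainder, rest[0])
        elif remainder == "":
            # The rule ends the derivation and has consumed all of s.
            total += p
    return total

print(string_probability("aab"))  # 1/8: two uses of S -> a S, then S -> b
print(string_probability("ba"))   # 0: not in the language
```

Summing `string_probability("a" * n + "b")` over $n = 0, \dots, 19$ gives $1 - 2^{-20}$, which approaches 1 as more strings of the language are included.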

Prior to the 1960s, the usual method of calculating probability was based on frequency: taking the ratio of favorable results to the total number of trials. In his 1960 publication A Preliminary Report on a General Theory of Inductive Inference, and, more completely, in his 1964 publications A Formal Theory of Inductive Inference Part I and Part II, Ray fundamentally revised this definition of probability. He called this new form of probability “Algorithmic Probability”.

What was later called Kolmogorov Complexity was a side product of his General Theory. He described this idea in 1960: “Consider a very long sequence of symbols … We shall consider such a sequence of symbols to be ‘simple’ and have a high a priori probability, if there exists a very brief description of this sequence — using, of course, some sort of stipulated description method. More exactly, if we use only the symbols 0 and 1 to express our description, we will assign the probability $2^{-N}$ to a sequence of symbols if its shortest possible binary description contains N digits.”
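
The assignment of $2^{-N}$ can be illustrated with a crude stand-in for the "stipulated description method". The Python sketch below assumes, purely for illustration, that the description length N is the length in bits of the `zlib`-compressed sequence. Compression only gives an upper bound on the shortest description, and Kolmogorov Complexity itself is not computable, so this captures the spirit of the definition rather than the definition itself. The function names are hypothetical, and priors are kept as base-2 logarithms because $2^{-N}$ underflows for long sequences.

```python
import random
import zlib

def description_length_bits(data: bytes) -> int:
    """N: number of bits in a zlib description of `data`.

    zlib is an assumed stand-in for the 'stipulated description method';
    it only upper-bounds the shortest possible description.
    """
    return 8 * len(zlib.compress(data, 9))

def log2_prior(data: bytes) -> int:
    """log2 of the a priori probability 2**-N, kept in log form to avoid underflow."""
    return -description_length_bits(data)

# A long, highly regular sequence of the symbols 0 and 1 ...
regular = b"01" * 500
# ... versus 1000 pseudo-random bytes (fixed seed), which zlib is not expected to shorten.
rng = random.Random(0)
irregular = bytes(rng.getrandbits(8) for _ in range(1000))

print(log2_prior(regular), log2_prior(irregular))
```

Both inputs are 1000 bytes long, but the regular one has a much shorter description, so it receives an exponentially higher prior, which is the sense in which a "simple" sequence has a high a priori probability.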

In his paper Complexity-Based Induction Systems: Comparisons and Convergence Theorems, Ray showed that Algorithmic Probability is complete; that is, if there is any describable regularity in a body of data, Algorithmic Probability will eventually discover that regularity, requiring only a relatively small sample of that data.
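
The mechanism behind such convergence results can be shown on a drastically restricted scale: weight each hypothesis by $2^{-(\text{description length})}$, discard hypotheses that contradict the data, and predict with a prior-weighted vote of the survivors. In the Python sketch below, the hypothesis class is, by assumption, only the periodic binary patterns of period at most `MAX_PERIOD`, with a hypothetical code that charges $2k$ bits for a pattern of length $k$. Because this class is finite and computable, it is not complete: it can only ever discover periodic regularities.

```python
from fractions import Fraction
from itertools import product

# Hypothetical restriction: the only hypotheses are periodic binary patterns of
# period 1..MAX_PERIOD. A computable class like this one cannot be complete.
MAX_PERIOD = 6

def hypotheses():
    """Yield (pattern, prior) pairs.

    Hypothetical code: a pattern of length k costs 2k bits (k in unary using
    k bits, then the k pattern bits), so its prior is 2**-(2k).
    These priors sum to less than 1.
    """
    for k in range(1, MAX_PERIOD + 1):
        for bits in product("01", repeat=k):
            yield "".join(bits), Fraction(1, 2 ** (2 * k))

def prob_next_is_one(observed: str) -> Fraction:
    """Prior-weighted vote of the patterns consistent with `observed`."""
    n = len(observed)
    total = Fraction(0)
    ones = Fraction(0)
    for pattern, prior in hypotheses():
        k = len(pattern)
        if all(observed[i] == pattern[i % k] for i in range(n)):
            total += prior            # pattern survives: keep its prior weight
            if pattern[n % k] == "1":
                ones += prior         # ... and it predicts a 1 next
    # If nothing in the class fits the data, fall back to an even split.
    return ones / total if total else Fraction(1, 2)

print(prob_next_is_one(""))          # 1/2
print(prob_next_is_one("011" * 4))   # 0: every surviving pattern predicts 0
```

With no data, the vote is split evenly by symmetry. After `011011011011`, only the period-3 pattern `011` and the period-6 pattern `011011` survive, and both predict `0`, so the regularity has been found from a short sample.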

Algorithmic Probability is the only probability system known to be complete in this way. As a necessary consequence of its completeness, it is incomputable. The incomputability arises because some algorithms, a subset of those that are partial recursive, can never be evaluated fully: they may run for an unbounded time, and there is no general procedure for deciding in advance which of them will halt. But these programs will at least be recognized as possible solutions. On the other hand, any computable system is incomplete. There will always be descriptions outside that system’s search space which will never be acknowledged or considered, even in an infinite amount of time. Computable prediction models hide this fact by ignoring such algorithms.

In 1986, in the paper The Application of Algorithmic Probability to Problems in Artificial Intelligence, Ray described how Algorithmic Probability could be applied to A.I. He described the search technique he had developed: in search problems, the best order of search tests trials in increasing order of $T_i/P_i$, where $T_i$ is the time needed to test trial $i$ and $P_i$ is the probability that trial $i$ succeeds. He called this quantity the “Conceptual Jump Size” of the problem. Leonid A. Levin’s search technique approximates this order, and so Ray called this search technique Lsearch.
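
The claim about search order can be checked on a toy problem. The Python sketch below assumes, for illustration, that exactly one of several hypothetical candidate trials is the solution, with trial $i$ being the solution with probability $P_i$ and costing time $T_i$ to test. Sorting by increasing $T_i/P_i$ minimizes the expected time to find the solution: swapping two adjacent trials $a$ and $b$ changes the expected time by $T_a P_b - T_b P_a$, so $a$ should come first whenever $T_a/P_a \le T_b/P_b$. The brute-force check over all orderings only confirms this on a small input; it is not part of Lsearch.

```python
from fractions import Fraction
from itertools import permutations

def expected_search_time(trials):
    """Expected time to reach the solution when `trials` are tested in order.

    Each trial is (T, P): testing it costs T, and it is the solution with
    probability P. Assumes exactly one trial is the solution (the P values sum to 1).
    """
    elapsed = 0
    expected = Fraction(0)
    for t, p in trials:
        elapsed += t
        expected += p * elapsed  # with probability p, the search stops here
    return expected

def best_order(trials):
    """Order trials by increasing T/P."""
    return sorted(trials, key=lambda tp: Fraction(tp[0]) / tp[1])

# Hypothetical (T_i, P_i) pairs.
trials = [(4, Fraction(1, 2)), (1, Fraction(1, 10)), (2, Fraction(3, 10)), (8, Fraction(1, 10))]

ordered = best_order(trials)
brute_force = min(expected_search_time(list(p)) for p in permutations(trials))
print(ordered, expected_search_time(ordered), brute_force)
```

Here the $T_i/P_i$ order tests the trials with costs 2, 4, 1, 8 and gives an expected time of 29/5, matching the brute-force minimum; testing them in the listed order would give 61/10.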

In other papers he explored how to limit the time needed to search for solutions, writing on resource-bounded search. The search is limited by available time or computation cost rather than by pruning the search space, as is done in some other prediction methods such as Minimum Description Length.
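
Bounding the search by time rather than by pruning can be sketched in the style of Levin's search. In phase $k$, each candidate $i$ gets a budget of $\lfloor P_i \cdot 2^k \rfloor$ steps, and the total budget doubles each phase, so no candidate is ever removed; long-running ones are simply not finished until the budget is large enough. The candidates, their priors, and their running times below are hypothetical, the function name `levin_style_search` is illustrative, and for simplicity this toy restarts every candidate from scratch in each phase instead of simulating real programs.

```python
from fractions import Fraction

# Hypothetical candidates: (name, prior P_i, steps needed to run, is it a solution?)
CANDIDATES = [
    ("guess_a", Fraction(1, 2), 1000, False),
    ("guess_b", Fraction(1, 4), 40, True),
    ("guess_c", Fraction(1, 8), 5, True),
    ("guess_d", Fraction(1, 8), 10**9, True),  # effectively never finishes here
]

def levin_style_search(candidates, max_phase=40):
    """Phase k gives each candidate a budget of floor(P_i * 2**k) steps.

    Returns (name, phase, total_steps_spent) for the first candidate that both
    finishes within its budget and is a solution, or None if none does.
    """
    spent = 0
    for phase in range(max_phase + 1):
        for name, prior, steps_needed, solves in candidates:
            budget = int(prior * 2**phase)  # floor, since everything is positive
            # Restart from scratch: run until done or until the budget runs out.
            spent += min(steps_needed, budget)
            if steps_needed <= budget and solves:
                return name, phase, spent
    return None

print(levin_style_search(CANDIDATES))  # ('guess_c', 6, 113)
```

Here `guess_c` ($T = 5$, $P = 1/8$, so $T/P = 40$) is found in phase 6, ahead of `guess_b` ($T/P = 160$), after 113 steps in total. When the priors sum to at most 1, the first phase whose budget covers a solution candidate $i$ satisfies $2^k < 2T_i/P_i$, and the work over all phases up to that point is less than $2^{k+1}$, so under these assumptions the total work stays below $4T_i/P_i$ (160 here).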

Throughout his career, Ray was concerned with the potential benefits and dangers of A.I., discussing them in many of his published reports. In 1985 his paper The Time Scale of Artificial Intelligence: Reflections on Social Effects analyzed a likely evolution of A.I., giving a formula predicting when it would reach the “Infinity Point”. This Infinity Point is an early version of the “Singularity” later made popular by Ray Kurzweil.

In 1970 Ray formed his own one-man company, Oxbridge Research, and continued his research there except for periods at other institutions such as MIT, Saarland University in Germany, and IDSIA in Switzerland. In 2003 he was the first recipient of the Kolmogorov Award from the Computer Learning Research Center (CLRC) at Royal Holloway, University of London, where he gave the inaugural Kolmogorov Lecture. He was a visiting professor at the CLRC.
